In my recent article, What is Observability, I discussed how observability is crucial for understanding complex architectures and their interactions and dependencies between different system components. Data Observability, unlike Software Observability, aims to identify the essential data needed for a comprehensive understanding. It relies on four pillars: metrics, metadata, lineage, and logs, with data lineage being vital. This entails documenting and visually representing the data’s journey from source to consumption, including tracking transformations, interpreting changes, and understanding their reasons.

A Winning Data Quality Strategy

A vital component of a Data Architecture strategy involves elevating data quality to a high priority within the business, leading to improved sales forecasts, better customer experiences, and more insightful business intelligence. Consequences stemming from poor-quality data include loss of income, inaccurate analytics, potential fines for privacy infractions, reduced efficiency, missed opportunities, and wasted time and money. Poor data quality also results in reputational damage and customer distrust.

When Data Quality is at the forefront of a Data Management strategy, everyone benefits – data engineers, data scientists, CDOs, CIOs/CTOs, data privacy officers (DPOs), and chief information security officers (CISOs) from the richer view of their data assets. To ensure data flows are monitored appropriately for loss of quality, Data Observability shines a light on what would be a blind spot.

How To Define Data Quality Within Your Business

Alignment of data quality nomenclature, definitions, and datasets can bring disparate business units together, allowing for a unified understanding of the design approach to a data quality program. Furthermore, engaging with the right stakeholders and showing them the path to success vs telling them will make a difference in garnering buy-in from the onset. A few ways to approach this mindset are the following:

- Clearly articulate business requirements through discussions with stakeholders. Data may not be flawless; it’s inadequate to meet specific use cases.

- Assess how your existing data aligns with those requirements. Data can deviate in numerous ways, so identifying gaps helps pinpoint the most easily addressed issues.

- Evolve consistently by implementing established methods such as clear ownership, standardized development processes, monitoring, education, etc. Although GenAI is a recent addition, we can draw upon decades of proven data governance best practices.

Ensuring Data Quality with Datavolo

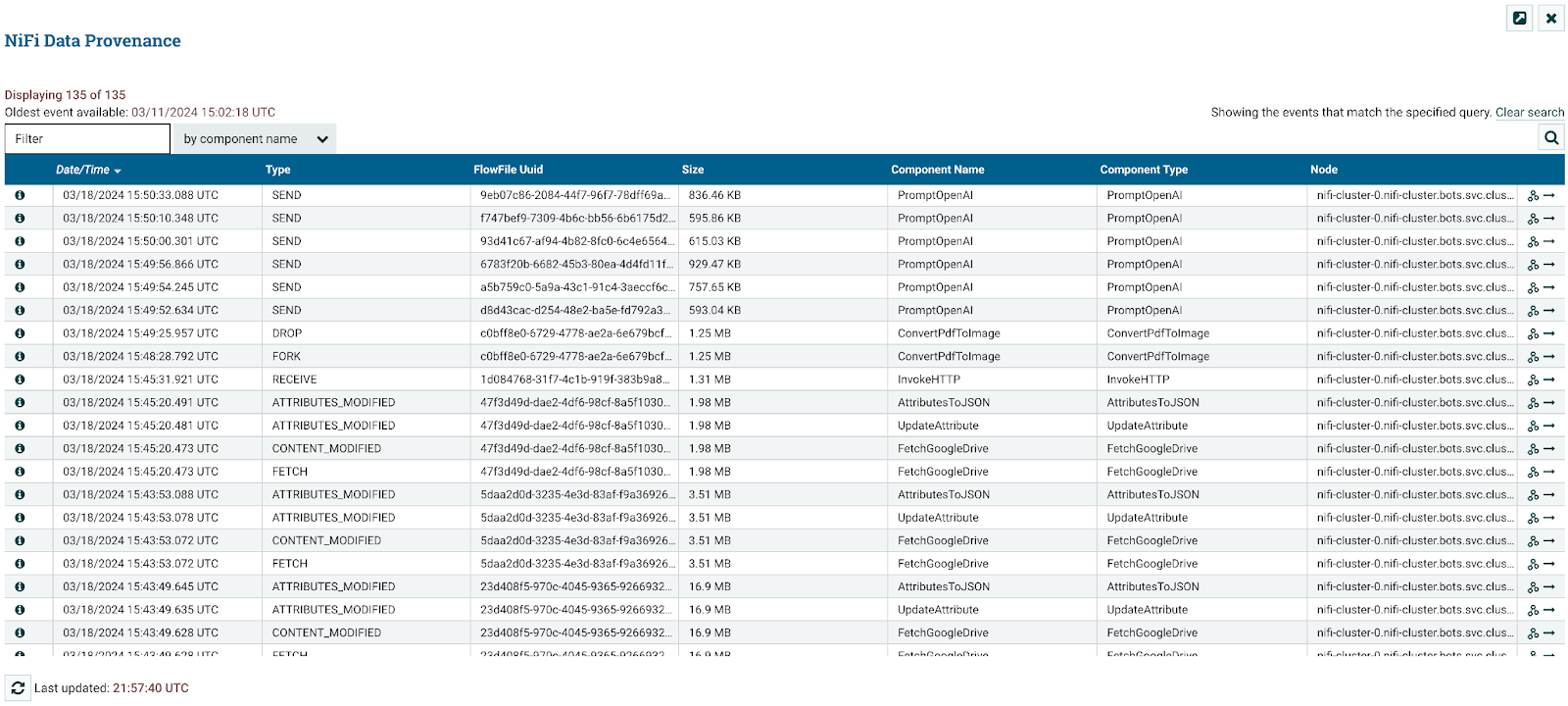

Apache NiFi is an open-source data integration tool that automates system data flow. While its primary focus is on data ingestion and movement, NiFi provides features and capabilities that contribute to ensuring data quality within data pipelines. Figure 1 below shows the details of NiFi’s Data Provenance Repository.

The creators of Apache NiFi founded Datavolo to enhance data observability capabilities and take it to the next level with the advent of GenAI.

Figure 1: NiFi Data Provenance

Implementing Data Observability

Datavolo, powered by Apache NiFi, excels tremendously within the data observability landscape. NiFi was purposely built to uphold the Chain of Custody of each piece of data (also known as data provenance) within the Provenance Repository, where the history of each FlowFile is stored. Listed below are multiple ways in which NiFi addresses data quality concerns:

- Data Provenance:

- NiFi maintains a detailed data provenance system that tracks data lineage as it moves through it. This includes information about each piece of data’s origin, transformations, and destinations.

- Data provenance helps in auditing and understanding the journey of data, making it easier to identify and trace any issues related to data quality.

- Data Cleansing:

- NiFi enables the integration of processors that perform data cleansing operations, such as removing duplicates, correcting errors, or transforming data to conform to desired standards.

- These processors help improve data quality by addressing common issues in the data.

- Data Validation and Transformation:

- NiFi processors can use schema registries or custom logic to check incoming data, ensuring it meets specific quality standards. This validation prevents issues related to mismatched data types or structures.

- Transformation processors can clean, enrich, or standardize the data, improving data quality.

- Data Profiling:

- NiFi facilitates using processors for data profiling, allowing users to analyze the pipeline’s characteristics and data distribution.

- Profiling helps understand data patterns, identify outliers, and detect potential issues or anomalies affecting data quality.

- Error Handling:

- NiFi provides robust error-handling and retry capabilities, allowing users to define how errors should be managed within the data flow. This includes strategies for handling validation errors, connectivity issues, or other failures.

- Users can configure processors to route invalid or erroneous data to separate paths for further analysis or correction.

- Logging:

- Logging capabilities allow for detailed tracking of errors, warnings, and other events, providing visibility into the health and quality of the data pipeline.

- Monitoring and Alerts:

- NiFi offers monitoring features that allow users to monitor the health and performance of data flows. These features include metrics on throughput, latency, and error rates.

- Users can build pipelines to send alerts based on various thresholds, enabling proactive identification of issues that may impact data quality.

- Quality-of-Service (QoS) Prioritization:

- NiFi allows users to prioritize data flows based on quality-of-service requirements. This ensures that high-priority data is processed and delivered with greater attention, reducing the likelihood of data quality issues.

- Feedback Loops:

- NiFi supports feedback loops by allowing users to route data back into the pipeline for reprocessing or correction. This iterative approach can be valuable for refining data quality over time.

Datavolo has the ability to integrate with existing data catalogs, and schema registries allowing for a “shift left” mindset to enhance data quality throughout the early stages of acquisition within the pipeline. In doing so, several principles come into focus when working with data:

- Precision: Greater alignment of data with actuality signifies higher accuracy.

- Completeness: Ensure all relevant data is included and accounted for.

- Consistency: Avoid inconsistencies, identify accurate data while removing inaccurate entries.

- Uniqueness: Data should accurately represent reality and avoid duplication.

- Timeliness: Availability and accessibility of data is paramount for information accuracy.

Summary

In data observability, metrics reveal internal data characteristics, while metadata clarifies external data features like origin and structure. Logs chronicle system actions, aiding monitoring and auditing. By incorporating these features, Datavolo, powered by Apache NiFi, facilitates the establishment of robust data quality practices within data pipelines, ensuring that data is accurate, reliable, and meets the desired standards as it traverses the system.

These pillars collectively enhance data quality and understanding. Security, auditing, and data tracking are foundational to NiFi. Datavolo provides a secure and compliant infrastructure with a robust provenance system.