In the week since Datavolo released its Flow Generation capability, we’ve seen fantastic adoption, with users eagerly requesting flows from the Flow Generation bot. We’re excited to share that we have upgraded our models, improving both the power and the accuracy of flow generation, and that we’ve introduced several key new features.

One of the most notable improvements is the model’s accuracy. Specifically, we have refined how it selects the most relevant Processors for sources and sinks, and we have fine-tuned its logic for identifying the appropriate Controller Service for your use case.

The accuracy improvements alone would have justified a new model version. However, the flow requests we’ve received through Slack have been incredibly insightful and have led us to enable additional capabilities. For instance, you can now ask the Flow Generator to create a flow that uses NiFi’s Stateless Execution Engine. This engine offers different runtime trade-offs, most notably moving the “transactional boundary” from the Processor level to the Process Group level. As a result, a flow can consume messages from durable stores such as Apache Kafka, JMS, or Amazon Kinesis without acknowledging them until the entire Process Group has finished processing. If processing fails, the messages are never acknowledged and will be redelivered.
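The redelivery behavior described above comes down to *when* a message is acknowledged. The Python sketch below is only an illustration of that idea: it is not NiFi code and does not use any Kafka, JMS, or Kinesis client API. `DurableQueue` and `run_group` are hypothetical names standing in for a durable source and a Process Group. A message is acknowledged only after every step in the group succeeds; a failure anywhere puts it back for redelivery.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class DurableQueue:
    """Hypothetical stand-in for a durable source (e.g. a Kafka topic):
    a received message stays pending until it is explicitly acknowledged."""

    def __init__(self, messages: List[str]) -> None:
        self._pending: Deque[str] = deque(messages)
        self._in_flight: Optional[str] = None

    def receive(self) -> Optional[str]:
        if self._in_flight is not None:
            raise RuntimeError("previous message not yet acked or nacked")
        if not self._pending:
            return None
        self._in_flight = self._pending.popleft()
        return self._in_flight

    def ack(self) -> None:
        # Processing succeeded: the message is removed for good.
        self._in_flight = None

    def nack(self) -> None:
        # Processing failed: return the message to the front for redelivery.
        if self._in_flight is not None:
            self._pending.appendleft(self._in_flight)
        self._in_flight = None

    def __len__(self) -> int:
        return len(self._pending)


Step = Callable[[str], str]


def run_group(queue: DurableQueue, steps: List[Step]) -> Optional[str]:
    """Run every step on one message; acknowledge only if the whole group succeeds."""
    message = queue.receive()
    if message is None:
        return None
    try:
        data = message
        for step in steps:
            data = step(data)
    except Exception:
        queue.nack()  # any failure in the group: nothing is acknowledged
        raise
    queue.ack()  # the transactional boundary is the whole group
    return data


# Demo: the sink fails on its first call, so the message is redelivered.
attempts = {"n": 0}


def flaky_sink(data: str) -> str:
    attempts["n"] += 1
    if attempts["n"] == 1:
        raise ConnectionError("sink unavailable")
    return data


queue = DurableQueue(["order-1"])
steps: List[Step] = [str.upper, flaky_sink]
try:
    run_group(queue, steps)
except ConnectionError:
    pass
print(len(queue))               # 1: order-1 is still pending
print(run_group(queue, steps))  # ORDER-1
print(len(queue))               # 0
```

Roughly speaking, with a Processor-level boundary the consuming step would commit on its own, so the source message would already be acknowledged when a later step failed and any retry would have to happen inside NiFi rather than through redelivery from the source.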

There are several ways to have NiFi process only a single FlowFile at a time, and the Flow Generation bot can now handle this for you if you ask. For example, you might ask it to “Create a flow that <insert processing logic>… Only process one file at a time.”

At Datavolo, we are dedicated to generating the best and most accurate flows possible. However, anything produced with Generative AI can contain inaccuracies, and accuracy can vary from one request to the next. We have enhanced our bot to make it clearer when accuracy may be lower.

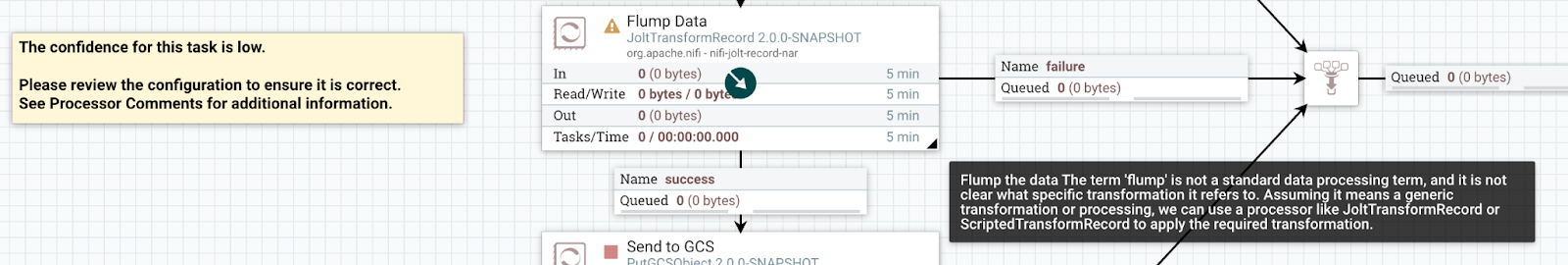

If the bot cannot find a suitable Processor for a specific task, it now says so in its Slack reply, along with helpful context. In other cases, the model will select a Processor and flag it in the NiFi flow as one that should be carefully reviewed. For example, suppose your message contains a typo and you ask the bot to “Generate a flow that fetches data from S3, flumps the data, and then sends it to GCS.”

The model may omit the “flump the data” step and include a warning in its reply, such as “The term ‘flump’ is not a standard data processing term, and it is not clear what specific transformation it refers to. Assuming it means a generic transformation or processing, we can use a processor like JoltTransformRecord or ScriptedTransformRecord to apply the required transformation.” Alternatively, it may insert a JoltTransformRecord Processor and prominently label it with this warning in the generated flow so that it stands out during review.

Even without typos, the model’s confidence may be low in some situations, for instance if you ask it to send data to an endpoint it is not familiar with or to perform a transformation it is uncertain about.

Flow generation is a powerful capability on its own, but quickly identifying and highlighting the parts of a flow that need particular attention means an even faster path to production!

We are thrilled not only to offer this capability but also to see so many users embracing it and to watch improvements arrive so rapidly. If you haven’t already, we invite you to join our Slack Community and try it for yourself!