Datavolo's blog

What's in Datavolo's blog? Insights and inspiration, case studies and community for AI/ML and Data Engineers. Discover what we are talking about every day here at Datavolo!

What is LLM Insecure Output Handling?

The Open Worldwide Application Security Project (OWASP) states that insecure output handling is the failure to validate large language model (LLM) outputs, which may lead to downstream security exploits, including code execution that compromises systems and exposes data. This...

Data Ingestion Strategies for GenAI Pipelines

You did it! You finally led the charge and persuaded your boss to let your team start working on a new generative AI application, and you’re psyched to get started. You get your data and start the ingestion process, but right when you think you’ve nailed it, you...

How we use the Kubernetes Operator pattern

Organizations using NiFi for business-critical workloads have deep automation, orchestration, and security needs that Kubernetes by itself cannot support. In this second installment of our Kubernetes series, we explore how the Kubernetes Operator pattern alleviates...

Constructing Apache NiFi Clusters on Kubernetes

Introduction: Clustering is a core capability of Apache NiFi. Clustered deployments support centralized configuration and distributed processing. NiFi 1.0.0 introduced clustering based on Apache ZooKeeper for coordinated leader election and shared state tracking. Among...

Prompt Injection Attack Explained

By now, it’s no surprise that we’ve all heard about prompt injection attacks affecting Large Language Models (LLMs). Since November 2023, prompt injection attacks have been wreaking havoc on many in-house chatbots and homegrown large language models. But what is...

Onward with ONNX® – How We Did It

Digging into new AI models is one of the most exciting parts of my job here at Datavolo. However, having a new toy to play with can easily be overshadowed by the large assortment of issues that come up when you’re moving your code from your laptop to a production...

Tutorial – How to Convert to ONNX®

Converting from PyTorch/Safetensors to ONNX®: Given the advantages described in Onward With ONNX®, we’ve taken the opinion that if it runs on ONNX, that’s the way we want to go. So while ONNX has a large model zoo, we’ve had to convert a few models by hand. Many models...

Survey Findings – Evolving Apache NiFi

Survey of long-time users to understand NiFi usage: Datavolo empowers and enables the 10X Data Engineer. Today's 10X Data Engineer has to know about and tame unstructured and multi-modal data. Our core technology, Apache NiFi, has nearly 18 years of development,...

Generative AI – State of the Market – June 17, 2024

GenAI in the enterprise is still in its infancy. The excitement and potential are undeniable. However, enterprises have struggled to derive material value from GenAI, and the hype surrounding this technology is waning. We have talked with hundreds of organizations...

Secure Data Pipeline Observability in Minutes

Monitoring data flows for Apache NiFi has evolved quite a bit since its inception. What started generally with logs and processors sprinkled throughout the pipeline grew to Prometheus REST APIs and a variety of Reporting Tasks. These components pushed NiFi closer to...

How to Package and Deploy Python Processors for Apache NiFi

Introduction: Support for Processors in native Python is one of the most notable new features in Apache NiFi 2. Each milestone version of NiFi 2.0.0 has enhanced Python integration, with milestone 3 introducing support for loading Python Processors from NiFi Archive...

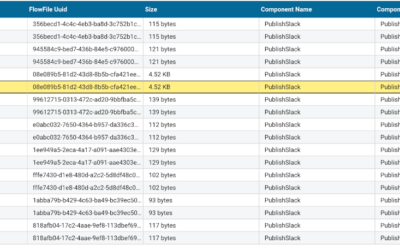

Troubleshooting Custom NiFi Processors with Data Provenance and Logs

We at Datavolo like to drink our own champagne, building internal tooling and operational workflows on top of the Datavolo Runtime, our distribution of Apache NiFi. We’ve written about several of these services, including our observability pipeline and Slack chatbots....