What is Apache NiFi?

Apache NiFi is an open-source platform that automates the movement of data between data sources and the systems that store, process, and analyze it.

The US National Security Agency (NSA) created the technology that is now Apache NiFi to ingest large volumes of unstructured data, move it through a data ingestion pipeline into storage, and analyze it in real time. The NSA contributed the code to the Apache Software Foundation in 2014, making it open source. Since then, a community of developers has built NiFi into the premier open-source data engineering platform for data pipeline automation.

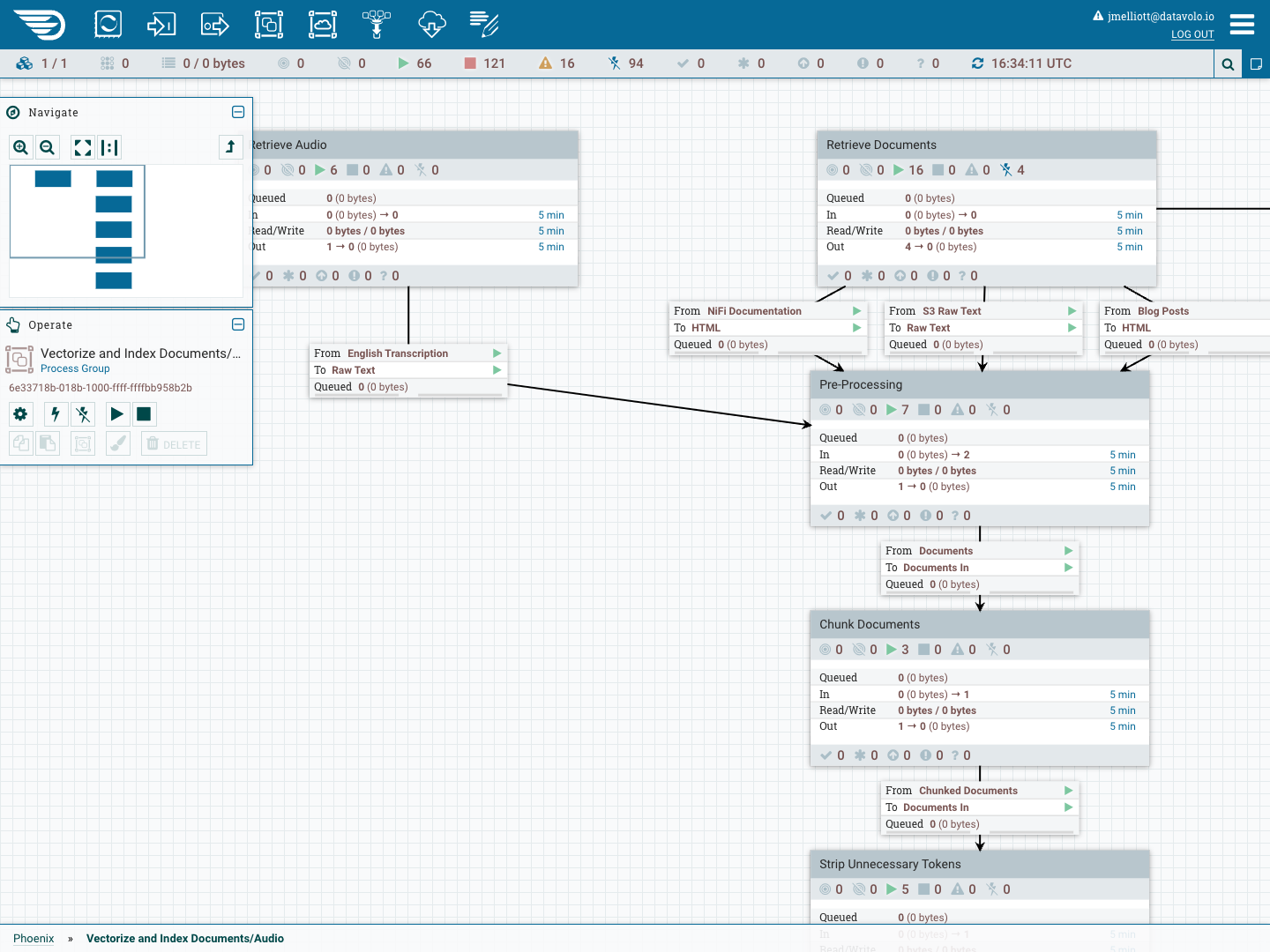

NiFi’s graphical interface makes it easy for data engineering teams to capture data at any endpoint, ingest it, and transform it, while NiFi routes that data to storage, analytics, or scalable machine learning. Data engineers design and visualize their data flows in NiFi and can track data lineage as the data is transformed at each stage of the pipeline. This helps them confirm that the data is accurate and reliable before it is used to train scalable machine learning models or to feed generative AI applications. Because data provenance and transformations can be traced and audited, teams can maintain confidence in NiFi’s end-to-end data ingestion pipeline.

NiFi makes data flow configuration easy and data pipeline automation visual and interactive. Unlike legacy data engineering platforms that rely on brittle ETL pipelines, NiFi lets data engineers change configurations quickly through a web browser. When data changes or new sources emerge, engineers can adjust flows quickly enough that the AI or analytics systems depending on that data do not break.

NiFi’s extensible, open-source architecture integrates with unstructured sources such as text, multimedia, and sensor data, as well as with structured data. This flexibility lets data engineers, data scientists, and developers deliver insights with confidence in the underlying data quality and provenance. Teams can also collaborate on flows across authoring, testing, and operations.

How Does Apache NiFi Work?

Apache NiFi connects to data sources, tracks lineage, configures pipelines, secures data in motion, and visualizes data flows.

Connecting Apache NiFi to Multimodal Data

One of Apache NiFi’s superpowers is its ability to connect to a wide range of data sources using protocols and interfaces such as HTTP, FTP, JDBC, and Kafka. Common points of connection include:

- Vector databases like Pinecone;

- Document stores like SharePoint, Dropbox, and Box;

- Distributed file systems like HDFS;

- Cloud storage like Amazon S3, Azure Blob Storage, or GCS;

- Databases like PostgreSQL or Oracle;

- Data warehouses and lakehouse platforms like Snowflake, Google BigQuery, and Databricks;

- Delta Lake tables;

- IoT devices;

- APIs and web services;

- Messaging systems like Kafka or JMS; and

- Log files.

NiFi can connect to all of these sources and others through pre-built NiFi processors. It can also connect to proprietary, internal, or legacy data sources through custom processors and connectors. Diverse types of data make analytics richer and scalable machine learning more predictive. With pre-built processors, data science teams can integrate that diverse data directly into their data ecosystems without writing ingestion or transformation code and without learning new languages.
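Custom processors follow the same basic contract as built-in ones. A processor receives a FlowFile (attributes plus content) and transfers it to a named relationship, such as `success` or `failure`, and the canvas connects each relationship to the next step. The Python below is a conceptual sketch of that contract, not NiFi's actual processor API. `FlowFile`, `Processor`, `KeyValueToJson`, and `run` are hypothetical names, and the `key=value` legacy format is an assumed example.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FlowFile:
    """Hypothetical stand-in for a FlowFile: key/value attributes plus raw content."""
    attributes: Dict[str, str] = field(default_factory=dict)
    content: bytes = b""


class Processor:
    """Hypothetical base class: on_trigger returns the relationship to transfer to."""
    relationships = ("success", "failure")

    def on_trigger(self, flowfile: FlowFile) -> str:
        raise NotImplementedError


class KeyValueToJson(Processor):
    """Hypothetical custom processor for a legacy system that emits 'key=value' lines."""

    def on_trigger(self, flowfile: FlowFile) -> str:
        record: Dict[str, str] = {}
        for line in flowfile.content.decode("utf-8").splitlines():
            if not line.strip():
                continue  # ignore blank lines
            key, sep, value = line.partition("=")
            if not sep:
                return "failure"  # malformed line: original content is left untouched
            record[key.strip()] = value.strip()
        flowfile.content = json.dumps(record).encode("utf-8")
        flowfile.attributes["mime.type"] = "application/json"
        return "success"


def run(processor: Processor, flowfiles: List[FlowFile]) -> Dict[str, List[FlowFile]]:
    """Toy scheduler: trigger the processor once per FlowFile and queue the
    result on the relationship it chose, as a connection on the canvas would."""
    queues: Dict[str, List[FlowFile]] = {name: [] for name in processor.relationships}
    for flowfile in flowfiles:
        queues[processor.on_trigger(flowfile)].append(flowfile)
    return queues


if __name__ == "__main__":
    results = run(KeyValueToJson(), [FlowFile(content=b"id=42\nstatus=ok"),
                                     FlowFile(content=b"not a record")])
    print(results["success"][0].content)  # b'{"id": "42", "status": "ok"}'
    print(len(results["failure"]))        # 1
```

Routing malformed input to its own relationship means failures can be sent to a retry or review queue instead of stopping the flow.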

Lineage Tracking Across the Ingestion Pipeline

Apache NiFi tags each unit of data (a FlowFile) with metadata as it flows through the system. This lets users trace data provenance across the entire data ingestion pipeline.

That metadata can describe where the data came from, where it is headed, and how it was transformed at each step of the flow.

Provenance tracking gives NiFi users insight into data lineage. They use that insight for auditing, lineage analysis, and troubleshooting, and to demonstrate compliance with data governance policies and regulations.
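Lineage can be reconstructed by recording an event each time a component touches a FlowFile, then walking parent links backwards. The Python below sketches that idea. The event type names are borrowed from NiFi's provenance event types (such as `RECEIVE`, `CONTENT_MODIFIED`, `FORK`, and `SEND`), and the processor names serve only as labels. `ProvenanceLog` and its methods are hypothetical and much simpler than NiFi's Provenance Repository. Storing parent IDs on the child's event is also a simplification.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ProvenanceEvent:
    event_id: int
    event_type: str                # e.g. "RECEIVE", "CONTENT_MODIFIED", "FORK", "SEND"
    flowfile_id: str
    component: str                 # the processor that emitted the event
    parents: Tuple[str, ...] = ()  # parent FlowFile IDs (simplified FORK/JOIN model)
    details: Dict[str, str] = field(default_factory=dict)


class ProvenanceLog:
    """Hypothetical append-only provenance log with a lineage query."""

    def __init__(self) -> None:
        self._events: List[ProvenanceEvent] = []
        self._next_id = itertools.count(1)

    def record(self, event_type: str, flowfile_id: str, component: str,
               parents: Tuple[str, ...] = (), **details: str) -> ProvenanceEvent:
        event = ProvenanceEvent(next(self._next_id), event_type, flowfile_id,
                                component, tuple(parents), dict(details))
        self._events.append(event)
        return event

    def lineage(self, flowfile_id: str) -> List[ProvenanceEvent]:
        """Return events for a FlowFile and all of its ancestors, oldest first."""
        seen, stack, found = set(), [flowfile_id], []
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            for event in self._events:
                if event.flowfile_id == current:
                    found.append(event)
                    stack.extend(event.parents)  # follow links back to parents
        return sorted(found, key=lambda e: e.event_id)


if __name__ == "__main__":
    log = ProvenanceLog()
    log.record("RECEIVE", "ff-1", "ListenHTTP", source="sensor-gateway")
    log.record("CONTENT_MODIFIED", "ff-1", "ConvertRecord", schema="readings-v2")
    log.record("FORK", "ff-2", "SplitRecord", parents=("ff-1",))
    log.record("SEND", "ff-2", "PutS3Object", destination="s3://example-bucket")
    for e in log.lineage("ff-2"):
        print(e.event_id, e.event_type, e.flowfile_id, e.component)
```

The lineage query for `ff-2` returns all four events in order, including the two recorded against its parent `ff-1`. An auditor would follow the same kind of end-to-end trail.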

NiFi’s FlowFile Repository tracks the state of every FlowFile, NiFi’s unit of data, as it moves through the system. FlowFiles that are actively being processed are held in an in-memory HashMap, which speeds performance. The repository stores metadata about each FlowFile, such as its attributes (for example, file size and content type) and a pointer to its content. The content itself lives in the separate Content Repository, and lineage history is recorded in the Provenance Repository. Each change to a FlowFile is logged to the FlowFile Repository’s write-ahead log, so state can be recovered after a restart. NiFi also queues and prioritizes FlowFiles between processors and routes them to the appropriate processors for transformation and enrichment.
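The pattern described here is a write-ahead log: an in-memory map of live FlowFiles, backed by an on-disk log of every change. The Python below is a toy model of that pattern, assuming a JSON-lines log file. `ToyFlowFileRepository` and its `content_claim` and `queue` fields are hypothetical. Real NiFi also checkpoints and compacts its log, which this sketch omits.

```python
import json
import os
import tempfile
from typing import Dict, Optional


class ToyFlowFileRepository:
    """Hypothetical write-ahead-log repository: live FlowFile records sit in an
    in-memory dict, and every change is appended to a log file before it is
    applied, so the dict can be rebuilt after a restart."""

    def __init__(self, log_path: str) -> None:
        self.log_path = log_path
        self.flowfiles: Dict[str, dict] = {}
        self._replay()  # recover state left by a previous run, if any

    def update(self, ff_id: str, attributes: Dict[str, str],
               content_claim: str, queue: str) -> None:
        """Create or update a FlowFile record (attributes, content pointer, queue)."""
        record = {"attributes": attributes, "content_claim": content_claim, "queue": queue}
        self._commit({"op": "update", "id": ff_id, "record": record})

    def delete(self, ff_id: str) -> None:
        """Remove a FlowFile once it has left the flow."""
        self._commit({"op": "delete", "id": ff_id})

    def get(self, ff_id: str) -> Optional[dict]:
        return self.flowfiles.get(ff_id)

    def _commit(self, op: dict) -> None:
        # Write-ahead: make the change durable on disk first, then apply it in memory.
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps(op) + "\n")
            log.flush()
            os.fsync(log.fileno())
        self._apply(op)

    def _apply(self, op: dict) -> None:
        if op["op"] == "update":
            self.flowfiles[op["id"]] = op["record"]
        elif op["op"] == "delete":
            self.flowfiles.pop(op["id"], None)

    def _replay(self) -> None:
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, encoding="utf-8") as log:
            for line in log:
                if line.strip():
                    self._apply(json.loads(line))


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "flowfile-repo.log")
    repo = ToyFlowFileRepository(path)
    repo.update("ff-1", {"filename": "a.csv"}, "claim-1", "to-ConvertRecord")
    repo.update("ff-1", {"filename": "a.csv", "mime.type": "text/csv"}, "claim-1", "to-PutS3Object")
    repo.update("ff-2", {"filename": "b.csv"}, "claim-2", "to-ConvertRecord")
    repo.delete("ff-2")
    restored = ToyFlowFileRepository(path)  # simulate a restart
    print(restored.flowfiles)
```

Logging before applying is the key design choice. If the process crashes between the two steps, replaying the log restores the change instead of losing it.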

NiFi Data Pipeline Automation and Configuration

Data engineers and data architects use their expertise for complex data workflow management. The time those experts spend building or maintaining data pipelines is time taken away from identifying and ingesting new data streams.

Apache NiFi’s data engineering platform empowers data engineers to effortlessly design, configure, and maintain data workflows so that they can focus more on the big picture and think about the data they haven’t ingested yet.

NiFi Security Applied to Data Pipeline Automation

NiFi was born at the National Security Agency, whose purpose is to gather and protect sensitive data. For this reason, secure data transport and communication have been fundamental requirements since NiFi’s early days at the NSA, when it was still known as “Niagara Files.” To protect sensitive data and support compliance with privacy regulations, today’s NiFi includes encryption, authentication, and authorization mechanisms.

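For encryption in transit, NiFi uses Transport Layer Security (TLS) to secure its web UI, REST API, and site-to-site and cluster communication. Processors that transfer files over SFTP use the Secure Shell (SSH) protocol. These protocols protect data moving through NiFi from interception and unauthorized access.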
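A script or service that calls a TLS-secured NiFi endpoint, such as its REST API, should verify the server's certificate. Where NiFi is configured for client-certificate authentication, the caller must also present its own certificate. Here is a minimal client-side sketch using Python's standard `ssl` module. The function name, file paths, and host name are hypothetical placeholders.

```python
import ssl
from typing import Optional


def nifi_client_context(ca_file: Optional[str] = None,
                        client_cert: Optional[str] = None,
                        client_key: Optional[str] = None) -> ssl.SSLContext:
    """Build a client-side TLS context for calling a TLS-secured NiFi endpoint.

    ca_file: CA bundle that signed NiFi's server certificate (None = system trust store).
    client_cert/client_key: PEM files for mutual TLS, if NiFi requires client certificates.
    """
    # SERVER_AUTH enables certificate verification and hostname checking.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # reject SSL 3.0, TLS 1.0 and 1.1
    if client_cert:
        # Present a client certificate so NiFi can authenticate this caller.
        context.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return context


# Example use (hypothetical host and paths):
#   import urllib.request
#   ctx = nifi_client_context("ca.pem", "client.pem", "client-key.pem")
#   urllib.request.urlopen("https://nifi.example.com:8443/nifi-api/flow/about", context=ctx)
```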

For user authentication, NiFi supports options including LDAP, Kerberos, OpenID Connect (OIDC), client certificates, username/password authentication, and SAML for single sign-on. These options let admins verify client identities and prevent unauthorized access to data flows.

Finally, for user authorization, NiFi offers role-based access control (RBAC) that defines roles with specific permissions for users and groups. It also comes with multi-tenant authorization capabilities, allowing admins to partition data flows into isolated environments. A detailed audit trail of user activities helps organizations track user access and identify security breaches.
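The sketch below models the idea behind role-based, multi-tenant authorization. Policies grant an action (read or write) on a resource to users or groups. A process group without its own policy inherits from its parent, and every decision is appended to an audit trail. This is a hypothetical Python illustration, not NiFi's authorizer API. The path-based resource names and the `Authorizer` class are assumptions made for clarity.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Authorizer:
    """Hypothetical RBAC model: policies map (resource, action) to users/groups.

    Resources are path-like, e.g. "/process-groups/fraud"; a resource without its own
    policy inherits the policy of its parent path (a simplification of NiFi's
    process-group inheritance)."""
    groups: Dict[str, Set[str]] = field(default_factory=dict)  # group -> member users
    policies: Dict[Tuple[str, str], Set[str]] = field(default_factory=dict)
    audit: List[Tuple[str, str, str, bool]] = field(default_factory=list)

    def grant(self, resource: str, action: str, principal: str) -> None:
        self.policies.setdefault((resource, action), set()).add(principal)

    def _principals(self, user: str) -> Set[str]:
        # A user's principals are the user plus every group they belong to.
        return {user} | {g for g, members in self.groups.items() if user in members}

    def _policy(self, resource: str, action: str) -> Set[str]:
        path = resource.rstrip("/")
        while path:
            if (path, action) in self.policies:
                return self.policies[(path, action)]
            path = path.rpartition("/")[0]  # fall back to the parent resource
        return set()  # no policy anywhere up the tree: deny by default

    def is_allowed(self, user: str, resource: str, action: str) -> bool:
        allowed = bool(self._principals(user) & self._policy(resource, action))
        self.audit.append((user, action, resource, allowed))  # record every decision
        return allowed


if __name__ == "__main__":
    authz = Authorizer(groups={"fraud-team": {"alice"}, "iot-team": {"bob"}})
    # Each team gets write access only to its own process group (tenant isolation).
    authz.grant("/process-groups/fraud", "write", "fraud-team")
    authz.grant("/process-groups/iot", "write", "iot-team")
    print(authz.is_allowed("alice", "/process-groups/fraud/ingest", "write"))  # True
    print(authz.is_allowed("bob", "/process-groups/fraud/ingest", "write"))    # False
    print(authz.audit)
```

Denying by default when no policy exists means a new process group is closed to everyone until an admin grants access, which keeps tenants isolated.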

Tying all of these security measures into the larger data ecosystem is crucial. NiFi integrates with third-party authentication and directory services, inheriting many of the security controls already provided by an organization’s existing security infrastructure.

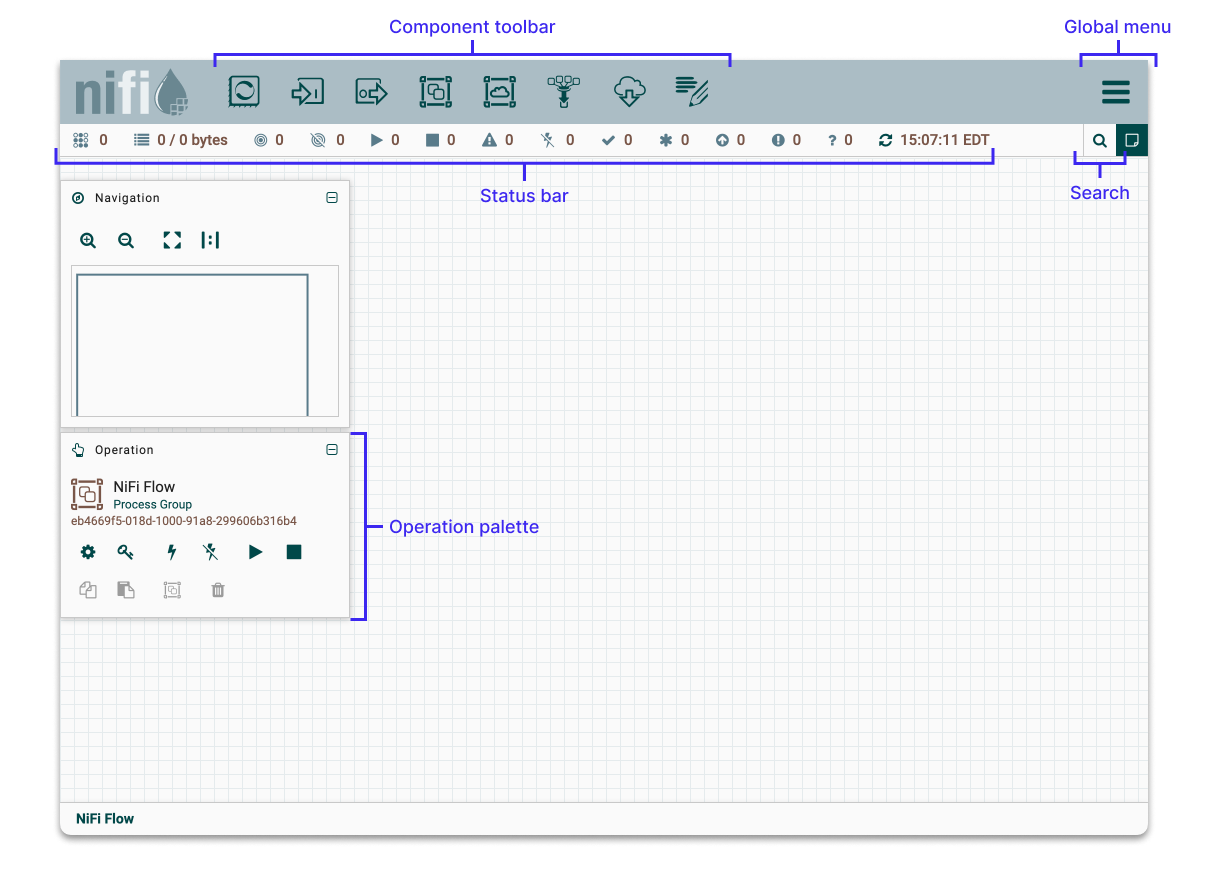

The NiFi UI for Data Pipeline Visualization

NiFi’s architects created an intuitive user interface that lets data engineers design, control, monitor, and maintain data pipelines. NiFi’s web-based, drag-and-drop canvas makes data workflow management easier and less error-prone. Engineers can also monitor data flows in real time, with the tools they need to adjust workflows and optimize performance.

Because the NiFi interface supports real-time monitoring, data engineers see immediately when they need to make adjustments. Configuration dialogs then give them granular control over settings. With NiFi’s user-friendly interface, data engineers can efficiently orchestrate data movement, transformation, and integration within the larger data ecosystem. This is where Apache NiFi’s open-source nature is especially helpful: teams can extend their flows with custom processors for their own systems.

Common Apache NiFi Use Cases By Industry

Apache NiFi transforms a data engineer’s three most important jobs: data pipeline automation, infrastructure management, and quality assurance. How much business value comes from making those jobs faster, easier, and more secure depends on the organization’s use cases. Those use cases depend largely on industry, because types of data vary by industry.

Every industry uses NiFi for data pipeline automation, and some use cases are shared across industries. Still, companies in the financial services, manufacturing, and software sectors are particularly eager users of Apache NiFi, because certain use cases and types of data are especially valuable to those industries.

Apache NiFi In Financial Services

Banks and capital markets firms are awash in data, and that data changes at massive scale every second. Much of it is structured. For example, account holder or household data consists of predictable data elements that can be stored in the rows and columns of a table, each with a known data type.

But the vast majority of financial services data is unstructured, especially the data with the most potential to move markets or raise regulatory issues. And remember: Datavolo co-founder Joe Witt and the rest of the NSA team that architected Niagara Files (now Apache NiFi) designed it for the challenges posed by unstructured data.

In finance, these important forms of unstructured data include:

- Email communications with clients or regulators;

- Social media comments, posts, and interactions;

- Call center transcripts of customer service calls;

- News articles and press releases;

- Research, including analyst reports, market research, and industry studies;

- Financial statements, including narrative disclosures and footnotes;

- Academic research papers, industry white papers, and technical documents;

- Legal documents and contracts;

- Web pages, including online forums; and

- Files attached to emails, such as PDFs, spreadsheets, and presentations.

For these reasons, data engineers at financial institutions turn to Apache NiFi for use cases like fraud detection and prevention, regulatory compliance and risk management, and business intelligence for market research and performance optimization.

Apache NiFi In Manufacturing

NiFi data ingestion pipelines in manufacturing typically manage these types of unstructured data:

- Sensor data collected from IoT sensors embedded in machinery, equipment, and production lines;

- Maintenance logs, including repair logs, maintenance schedules, and service reports, which offer valuable information on equipment uptime, reliability, and maintenance costs;

- Inspection reports from quality control studies;

- Supply chain records, including purchase orders, invoices, shipping documents, and customs forms;

- Engineering drawings and CAD models;

- Work instructions, such as standard operating procedures (SOPs);

- Email communications between employees, suppliers, and customers;

- Production reports detailing measures like production output, cycle times, downtime, and yield rates;

- Employee training materials; and

- Maintenance manuals, guides, and technical documentation.

Datavolo works with manufacturers on data workflow management for key use cases like real-time equipment monitoring, preventative maintenance, supply chain optimization, and quality control.

Apache NiFi In Software

With the emergence of real-time data processing and the recent boom in generative AI (GenAI), both established software companies and startups are integrating their products with Apache NiFi’s open-source architecture. Here are some of the ways that software and technology providers use NiFi:

- Data ingestion from diverse data sources to collect and process large volumes of data for analytics or scalable machine learning;

- Data lineage and provenance, allowing software companies to trace the origin, transformation, and destination of data;

- Real-time data processing that can be included in their product offerings;

- Data transformation that maintains data quality and consistency;

- Seamless data integration between disparate systems and applications;

- Event-driven architectures for products that need to react to events in real time;

- Workflow automation for data processing;

- Performance monitoring and alerts;

- Scalability and resilience, scaling horizontally and vertically as needed; and

- Security and compliance features that help software companies secure their data pipelines in line with regulatory requirements and industry standards.

Our founders and team come from the NSA, Onyara, Hortonworks, Cloudera, Unravel Data, Amazon Web Services, Google, and other major software companies. We’ve been helping software companies at all stages with data pipeline automation for decades.