Comparing Datavolo to Apache NiFi

Datavolo’s founders are the original architects of Apache NiFi, and Datavolo is based on Apache NiFi. Our team members are Project Management Committee members and committers to the NiFi project, and we regularly contribute code developed at Datavolo back into the Apache NiFi open-source core. Apache NiFi is a key part of the Datavolo platform, but the two are not one and the same.

Datavolo is to GenAI what NiFi is to analytics. Apache NiFi was created to capture, transform, and distribute data for analytics. We created Datavolo for the different but related purpose of training large language models (LLMs) on our clients’ data and delivering that data in the format best consumed by modern AI system architectures. One prominent example is Retrieval Augmented Generation (RAG), which depends on data being parsed, chunked, and vectorized before AI models can use it appropriately.

This page compares open-source Apache NiFi to Datavolo across three feature categories:

- Observability architecture,

- Fueling generative AI applications, and

- Deployment and SDLC.

This comparison table provides a breakdown of feature differences in those three areas. Read on below the table for more detail on those differences and why they matter to data engineers, developers, or administrators.

| Feature | Datavolo | Apache NiFi |
| --- | --- | --- |
| Observability Architecture | | |
| Low-code visual interface | ✅ | ✅ |
| Real-time monitoring and alerts | ✅ | ✅ |
| OpenTelemetry collection capabilities | ✅ | ✅ |
| Flow creation powered by GenAI | ✅ | — |
| Fueling Generative AI Applications | | |
| Data engineering for advanced RAG patterns | ✅ | — |
| Machine learning services for document intelligence | ✅ | — |
| Extensibility with custom Python processors | ✅ | ✅ |
| Deployment & SDLC | | |
| Production-ready GenAI integrations | ✅ | — |
| Secure containerization | ✅ | — |
| Cloud-native architecture | ✅ | — |
| Secure management for custom code | ✅ | — |

The Observability Architecture for Data Engineers

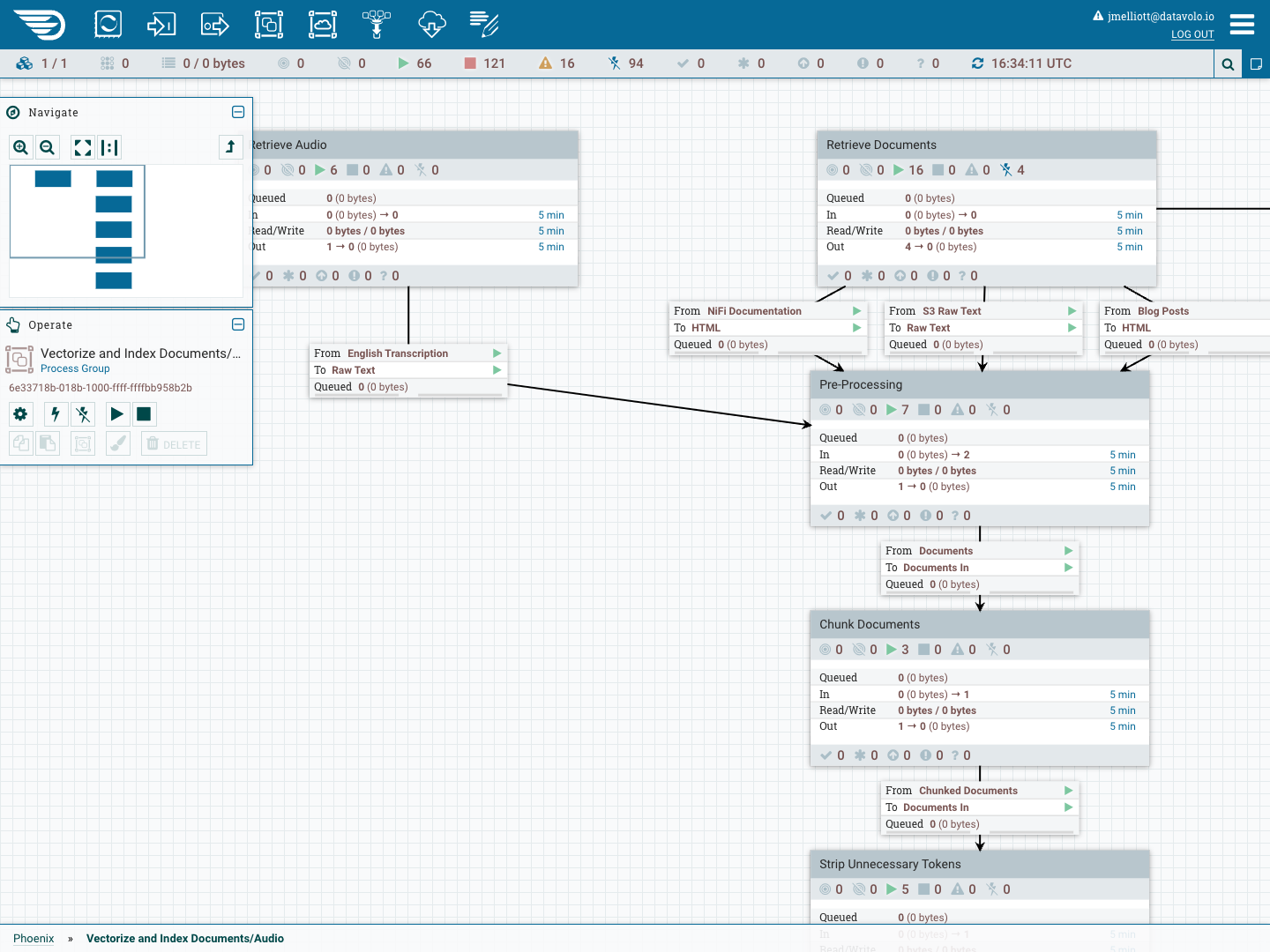

Let’s begin with the observability architecture that is shared between Apache NiFi and Datavolo. To be clear, there is no difference between Apache NiFi and Datavolo when it comes to the visual experience and the observability architecture that they share. This is an area where Datavolo architects are continuously giving code back to Apache NiFi. In fact, the Datavolo team contributed the updates it developed for a new user experience to Apache NiFi version 2.0. Because Datavolo’s observability makes data engineers 10X more productive, they can better meet the demand for new data pipelines, and they end up loving the visual experience of using Datavolo!

A Low-Code Visual Interface for the Best Data Pipeline Tools

Datavolo’s low-code visual interface makes it accessible to users with varying technical skills. The intuitive drag-and-drop interface allows users to design complex workflows without extensive coding knowledge, significantly reducing time and effort to implement machine learning and data processing tasks.

Datavolo’s low-code, visual approach to managing data pipelines stays in sync with Apache NiFi and the work of the larger open-source community. Its user-friendly interface makes it an ideal choice for organizations looking to empower a broader range of users to build and maintain data pipelines or AI workflows.

Real-time Monitoring and Alerts Within an Observability Architecture

Both Datavolo and Apache NiFi share the same real-time monitoring and alerts. The Datavolo team has extended NiFi’s native monitoring capabilities in service of GenAI workflows. Datavolo’s observability architecture is tailored to data engineers feeding GenAI applications.

Datavolo and Apache NiFi’s observability architecture provides real-time monitoring and alerts for comprehensive oversight of data pipelines in service of GenAI. This proactive approach keeps data flowing, minimizes downtime, and enhances reliability. One of the proprietary features that Datavolo supports is the export of NiFi telemetry as OpenTelemetry (aka “OTel”) metrics.

Integration with OpenTelemetry Collects Data for Cloud-Native GenAI Apps

Both Datavolo and NiFi leverage OpenTelemetry to enhance data collection capabilities and provide comprehensive observability of data pipelines. But what is OpenTelemetry?

Like Apache NiFi, OpenTelemetry is an open-source project. It offers three primary features–tracing, metrics, and log collection–which provide deep insights into application performance and behavior.

Datavolo supports all of the same telemetry collection methods as Apache NiFi, and we recommend its OpenTelemetry Reporting capability for vendor neutrality and enhanced security. Datavolo developed a capability to report all flow metrics in OpenTelemetry’s OTLP format. Our customers can monitor their Datavolo runtimes with their existing platforms or choose from any of the more than forty-five vendors that have adopted this industry standard.

By integrating Datavolo with OpenTelemetry logging, we allow data engineers to quickly detect and resolve issues with data flows. The integration ensures that all stages of the data pipeline, from ingestion to processing, are transparent and measurable.

Data Flow Authorship in Natural Language

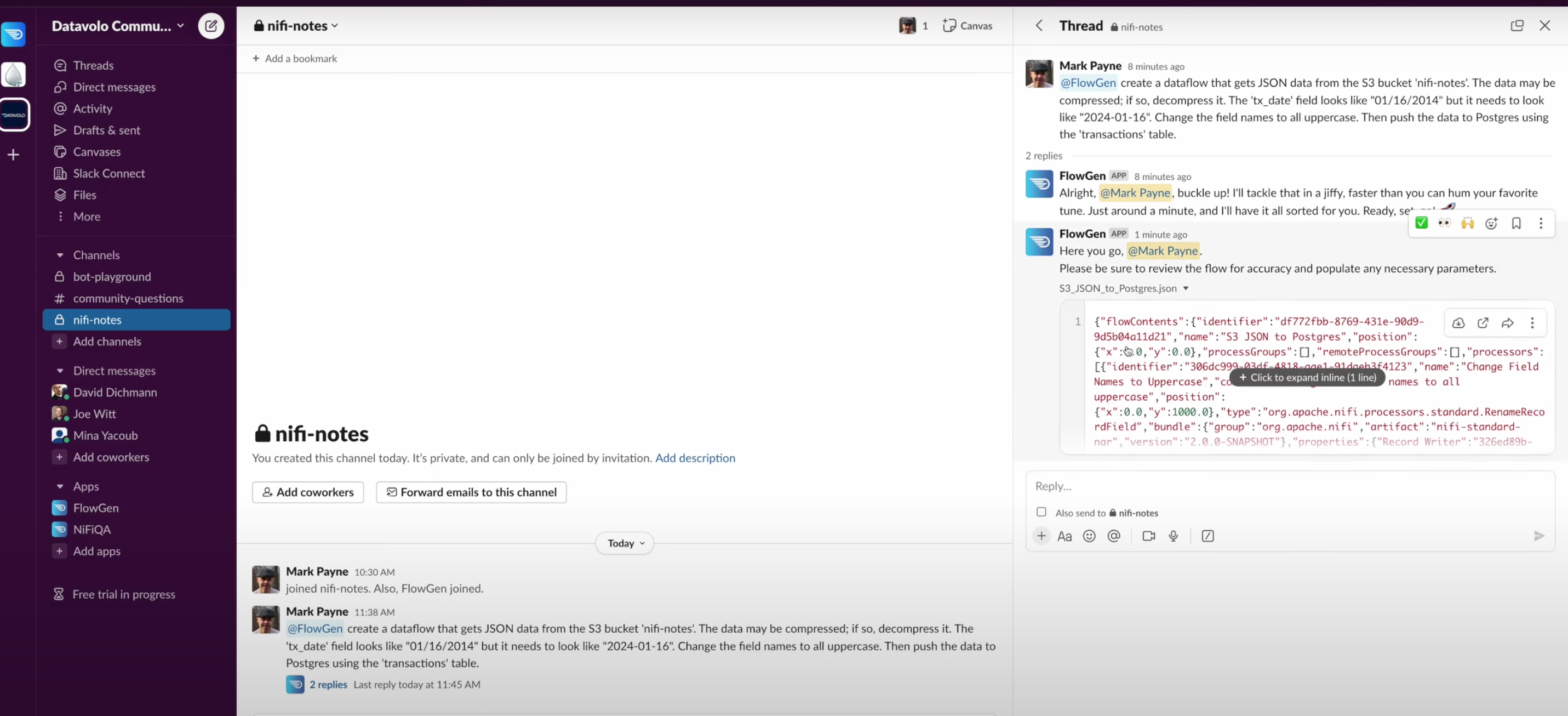

Datavolo’s Flow Generator is one part of the observability architecture that is unique to Datavolo and not included in open-source Apache NiFi. Flow Generator by Datavolo empowers data engineers to create data flows in minutes, using natural language commands. For example, in this demo video from January 2024, Apache NiFi co-creator and Datavolo engineer Mark Payne introduced our Flow Generator. Flow Generator turns data flow authorship that used to take months or weeks into jobs that take minutes or seconds.

In the demo, Slack returns a flow that Mark then downloads and drops into his NiFi canvas to review. He quickly checks the flow for accuracy, configures it, and sends it to Postgres, all in about two minutes. This same process used to involve lots of custom code and required days or weeks of work.

Flow Generator means that Datavolo clients can create GenAI models far more quickly. When data flow authorship becomes hundreds of times faster, data engineering teams can experiment more often. Unlike open-source Apache NiFi, which requires extensive manual configurations and lacks advanced NLP integration, Datavolo’s Flow Generator offers a seamless, automated process that uses natural language processing and is specifically designed to train GenAI.

Datavolo’s Advantages for Fueling GenAI Applications

The first big area of difference between Datavolo and Apache NiFi has to do with fueling GenAI applications. Remember, Apache NiFi was created well before AI was in general, widespread use. Large language models require different features and benefits than the legacy data flow use cases that NiFi was developed for, and still serves so well. We founded Datavolo to fuel generative AI applications.

From the beginning, our team built Datavolo to train LLMs using our clients’ proprietary multimodal data. Data engineers appreciate three Datavolo capabilities that NiFi lacks:

- AI and data engineering for advanced RAG pipelines;

- Scalable machine learning services for document intelligence; and

- Custom Python processors for extensibility of the AI technology stack.

AI and Data Engineering for Advanced RAG Pipelines Using Private Data

There’s good news and bad news about using public data to train proprietary AI applications. The good news is that the data is freely available. That’s also the bad news. When everyone trains models on the same public data, those models look and act the same. Private data is the key to differentiation in a world where suddenly everyone “does AI”. To create a unique AI product or experience requires unique private data, and our advanced RAG pipelines make that easy. In fact, only Datavolo comes with the specific processors for parsing unstructured documents, chunking data, embedding chunks, and integrating those chunks into vector databases.

At Datavolo, we think a lot about “chunking data”. In basic RAG pipelines, data scientists and data engineers embed a big text chunk for retrieval and synthesis. But a big chunk will include more junk. For example, if you trained a model on the entire Moby Dick novel, AI might tell you it was a book about a boat. Focus on a particular chapter or page where the great white whale sinks The Pequod, and the summary becomes more accurate and useful.
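To make chunk size concrete, here is a minimal Python sketch of fixed-size chunking with overlap. The function name `chunk_words` and the word-based window sizes are illustrative assumptions, not a description of Datavolo’s chunking processors.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words; each window shares
    `overlap` words with the one before it."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once a window has reached the end of the text.
        if start + size >= len(words):
            break
    return chunks
```

Smaller values of `size` give more focused chunks, in the spirit of summarizing one chapter rather than the whole novel; the overlap keeps a sentence that straddles a boundary from being cut off from its context.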

Here’s some more good news: most companies generate enough data to train their own unique AI models. In fact, we’ve found that huge global companies can become paralyzed by having too much private unstructured data. It’s hard for them to prioritize and commit because they don’t know where to chunk. This paralysis is especially acute when mapping data flows and ETL pipelines requires a lot of time and effort.

Datavolo simplifies the conundrum at exactly the place where AI and data engineering meet. We recommend that data engineers focus on Retrieval Augmented Generation (RAG). For newcomers, here’s a definition of RAG:

Retrieval Augmented Generation (RAG) is an AI technique combining document retrieval and text generation. It enables models to generate accurate, context-rich responses by retrieving relevant information from external data sources and integrating it into the generated output. This approach enhances the quality and relevance of AI-generated content.

The words “context-rich” and “relevance” are key. To put RAG in the simplest way possible, general unstructured data generates general AI results. Specific, private, context-rich, relevant unstructured data trains models that provide far better results. While Apache NiFi easily handles diverse and complex data types, only Datavolo’s specialized tools streamline the process of incorporating a company’s private data into what become extraordinarily precise AI applications that only that company can offer.

Back to that big whale. The purpose of RAG for GenAI is finding relevant chunks of text to send to the LLM. This is where AI and data engineering come together. The key is to point NLP at the most relevant chunks to find the best pieces of text. At Datavolo, we’ve found that an advanced RAG pattern called “Small-to-Big” is most effective, and we’ve partnered with companies like Pinecone and LangChain to make Small-to-Big work, enabling retrieval on smaller, more semantically precise chunks.
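The Small-to-Big idea can be sketched in a few lines of Python: match the query against small chunks (here, sentences), then hand the larger parent passage to the LLM. Everything in this sketch is an assumption for illustration. In particular, `embed` is a toy bag-of-words stand-in for a real embedding model, and the in-memory list stands in for a vector database.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def build_index(parents: list[str]) -> list[tuple[Counter, int]]:
    """Embed each sentence (small chunk) with a pointer to its parent (big chunk)."""
    index = []
    for parent_id, parent in enumerate(parents):
        for sentence in re.split(r"(?<=[.!?])\s+", parent.strip()):
            if sentence:
                index.append((embed(sentence), parent_id))
    return index

def retrieve(query: str, parents: list[str], index: list[tuple[Counter, int]]) -> str:
    """Find the best-matching small chunk, then return its whole parent passage."""
    q = embed(query)
    _, best_parent = max(index, key=lambda entry: cosine(q, entry[0]))
    return parents[best_parent]
```

Retrieval runs against the precise sentence, while generation receives the surrounding passage, so the model gets both an accurate match and enough context to answer well.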

Scalable Machine Learning Services for Document Intelligence

Datavolo provides scalable machine learning services tailored for the parsing and extraction of complex documents. Datavolo uses state-of-the-art Natural Language Processing (NLP) and computer vision (CV) models to parse and extract complex data from unstructured sources like PDFs, images, and scanned documents. Datavolo is also building fine-tuning capabilities that will support the end user’s ability to tune their CV-powered parsing models. This low-code interface will be extensible to the many document types in a modern enterprise.

For RAG pipelines where smart chunking is critical, Datavolo’s parsing processors derive important structural document metadata, such as whether a piece of text is a title, sub-title, body of text within a section, or a footnote. These derived metadata are used downstream to intelligently chunk the data into smaller pieces that are appropriate to pass as inputs to embedding models. This is consistent with the Small-to-Big approach described above.
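As a rough illustration of how structural metadata can drive chunking, the Python sketch below groups body text under its nearest heading. The element schema (dictionaries with `type` and `text` keys) and the `Chunk` class are hypothetical and do not represent Datavolo’s canonical document representation.

```python
from dataclasses import dataclass, field

@dataclass
class Chunk:
    heading: str
    body: list[str] = field(default_factory=list)

def chunk_by_structure(elements: list[dict]) -> list[Chunk]:
    """Start a new chunk at every title or sub-title; attach body text to it."""
    chunks = []
    current = Chunk(heading="")
    for el in elements:
        kind, text = el["type"], el["text"]
        if kind in ("title", "sub-title"):
            # Close the previous chunk only if it collected any body text.
            if current.body:
                chunks.append(current)
            current = Chunk(heading=text)
        elif kind == "body":
            current.body.append(text)
        # Footnotes and other element types are skipped in this sketch.
    if current.body:
        chunks.append(current)
    return chunks
```

Each resulting chunk carries its heading alongside its text, which gives an embedding model a semantically coherent unit instead of an arbitrary window of characters.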

Once Datavolo parses documents and transforms the text into Datavolo’s canonical representation, data engineers can test the quality of extraction using a defined schema and data quality rules. They can also use this parsed representation to detect PII and assure that sensitive data is not passed downstream to vector databases or language models. This includes named-entity recognition and extraction of objects within those documents, such as: dates, people, products, and financial figures. This is another way that the platform supports secure and scalable machine learning fed by a company’s private data streams.
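To show where a PII check might sit in such a pipeline, here is a deliberately simple Python sketch that redacts two kinds of identifiers before text moves downstream. The regular expressions and the `redact_pii` name are illustrative assumptions; production PII detection typically relies on trained named-entity models rather than two patterns.

```python
import re

# Illustrative patterns only: email addresses and US Social Security numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def redact_pii(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a [LABEL] placeholder and count the hits per label."""
    counts = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

The returned counts could be used to route a document: a chunk with any hits might be quarantined or redacted before it reaches a vector database or language model.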

Data teams familiar with Apache NiFi’s record-oriented processing can think about these document representations as a new entity type. Going forward, Datavolo will continue to deliver document-oriented processors that will natively read and write these document types to power RAG pipelines and AI applications. Apache NiFi does not natively include document-oriented processors specifically designed for this sort of document intelligence.

Custom Python Processors for Extensibility of the AI Technology Stack

By allowing data engineers to create custom Python processors, Datavolo enables the integration of specialized machine learning workflows directly within the AI technology stack. This extensibility is crucial for customizing data preprocessing, feature extraction, and model training tasks to specific GenAI requirements. Custom Python processors can be used to implement advanced Natural Language Processing (NLP) algorithms, leverage pre-trained models, or fine-tune models with unique private datasets.

Open-source Apache NiFi offers extensibility through custom processors as well, but it primarily relies on Java-based development. While NiFi supports scripting through its ExecuteScript processor, which can run Python, Groovy, or other scripts, the process is less streamlined than Datavolo’s native support for Python, limiting the extensibility of AI technology stacks that do not include Datavolo.

Datavolo’s approach simplifies the integration of Python-based machine learning libraries and frameworks, making it more accessible and efficient for data scientists working in Python. The resulting ease of extensibility with custom Python processors makes Datavolo a more adaptable and powerful platform for training and deploying advanced GenAI models, as compared to open-source Apache NiFi.
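For a sense of what a custom Python processor looks like, here is a minimal sketch that assumes the `FlowFileTransform` interface from the Python extension framework in Apache NiFi 2.x. Whether a given Datavolo runtime exposes exactly this interface is an assumption, and the `CountWords` processor and its `word.count` attribute are hypothetical. The fallback classes exist only so the sketch can be exercised outside NiFi.

```python
try:
    # Available only inside a NiFi 2.x Python runtime.
    from nifiapi.flowfiletransform import FlowFileTransform, FlowFileTransformResult
except ImportError:
    # Minimal local stand-ins (assumptions) for running the sketch outside NiFi.
    class FlowFileTransform:
        pass

    class FlowFileTransformResult:
        def __init__(self, relationship, attributes=None, contents=None):
            self.relationship = relationship
            self.attributes = attributes
            self.contents = contents


class CountWords(FlowFileTransform):
    """Hypothetical processor: adds a word.count attribute to each FlowFile."""

    class Java:
        implements = ["org.apache.nifi.python.processor.FlowFileTransform"]

    class ProcessorDetails:
        version = "0.0.1-SNAPSHOT"
        description = "Adds a word.count attribute to each FlowFile."

    def __init__(self, **kwargs):
        pass

    def transform(self, context, flowfile):
        # Read the FlowFile content, count whitespace-separated words,
        # and route to "success" with the count attached as an attribute.
        text = flowfile.getContentsAsBytes().decode("utf-8")
        count = len(text.split())
        return FlowFileTransformResult(
            relationship="success",
            attributes={"word.count": str(count)},
        )
```

The same shape extends to heavier work: the body of `transform` is ordinary Python, so it could call a pre-trained model or a chunking routine instead of counting words.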

Deployment and the Software Development Lifecycle (SDLC)

The second big area of difference between NiFi and Datavolo is Datavolo’s cloud-native, containerized data flow architecture. Our secure containerization promotes the microservice observability expected for modern SaaS platforms and apps. Datavolo’s cloud-native architecture saves the time normally lost deploying or integrating services with legacy technologies that lack cloud-native scalability and flexibility. Datavolo’s approach to IT operations is simple: a data flow architecture must be fast and easy to deploy, integrate, and maintain. Our architecture supports scalability, reliability, and security for GenAI workflows.

Production-Ready GenAI Integrations

Datavolo excels in integrating into the GenAI and Big Data processing ecosystems, enhancing its ability to train advanced AI models. Datavolo seamlessly connects with a variety of data sources and machine learning frameworks, such as TensorFlow, PyTorch, Pinecone, and LangChain, making data flow and model training more efficient.

A broad range of easy integration options allows data engineers to connect with their existing big data infrastructures, streamline data preprocessing, and apply sophisticated AI algorithms directly within the Datavolo platform. Because Datavolo is cloud-native and containerized, it is seamlessly interoperable with modern cloud services, databases, and data lakes. This ensures that Datavolo is forward-compatible with the growing diversity in the complex datasets needed to train LLMs for GenAI.

Open-source Apache NiFi does provide strong integration capabilities, all of which Datavolo inherits. Remember: Datavolo is Apache NiFi, but NiFi is not Datavolo. NiFi can interface with various data sources and Big Data processing systems, and it supports integrations through its extensive library of connectors and processors.

However, Apache NiFi lacks the out-of-the-box machine learning integration that comes with Datavolo. That means additional work for setup and customization in standalone NiFi, before connecting with AI frameworks. Datavolo’s direct support for GenAI and big data ecosystems, combined with its user-friendly interface and Python extensibility, makes it a more comprehensive solution for organizations aiming to train and deploy advanced AI models on Day 1.

Secure Containerization Promotes Microservices Observability

In his 2023 article, “6 Observability Design Patterns for Microservices Every CTO Should Know,” author Hiren Dhaduk puts his finger on the important connection between observability and security: “Observability in microservices discovers unknown issues between various service interactions to build a resilient and secure app.” We couldn’t agree more.

Here’s how we recommend combining components in a modern, open, data flow architecture that will give data engineers the microservices observability they need:

- Apache NiFi handles data ingestion, transformation, and routing.

- Distributed Tracing tools (like Jaeger or Zipkin) provide visibility into NiFi’s interactions with microservices, tracking data flow and processing times.

- Microservices Observability platforms (such as Prometheus and Grafana) monitor the performance and health of NiFi and associated services.

- Containerization (using Docker and Kubernetes) ensures that NiFi and microservices run in isolated, reproducible environments, to allocate resources efficiently.

In fact, when Citi Ventures announced their investment in Datavolo’s Series A funding, the investors cited security, governance and observability as some reasons for their investment:

“Datavolo also provides APIs to support advanced retrieval-augmented generation (RAG), embedding model integrations and vector databases, and has centralized security, governance and observability capabilities — all of which are top-of-mind for enterprises looking to build GenAI applications.”

Datavolo provides a secure, containerized version of Apache NiFi to be deployed in SaaS or Bring Your Own Cloud form factors (including Private Cloud Kubernetes environments). While open-source Apache NiFi does support Docker and Kubernetes for containerization, you have to do it yourself. Datavolo emphasizes turnkey solutions with integrated container orchestration. Kubernetes manages Datavolo’s containerized services, and we focus our product strategy on ease of deployment and management.

Apache NiFi offers security capabilities for a world before containerization. Datavolo inherits these and they include:

- SSL/TLS for data transmission;

- User authentication and authorization via LDAP or Kerberos; and

- Fine-grained access control and multi-tenant support.

Additionally, Datavolo includes features for security in a cloud-native, containerized world:

- Built-in encryption for data in transit and at rest;

- SaaS-specific security features; and

- Advanced threat detection and compliance support.

Datavolo has a Cloud-Native Architecture. Apache NiFi Does Not.

The term “cloud native” came into use around 2013, when Netflix discussed its web-scale application architecture in an AWS re:Invent talk. Datavolo’s founders architected “NiagaraFiles” before that, and it was only donated by the NSA to the Apache Software Foundation in 2014, through the NSA Technology Transfer Program. Translation: Apache NiFi was created before the widespread commercial adoption of cloud-native architectures. It wasn’t a thing yet.

This makes Datavolo’s cloud-native architecture very different from NiFi’s approach. When you see discussion of Apache NiFi in conjunction with “cloud-native”, you’ll also find words like, “Can be…”

Can be containerized.

Can be made to be scalable.

Can be deployed on public cloud platforms using Kubernetes or cloud-specific orchestration tools.

Datavolo is cloud-native.

This is important because a data flow architecture must be extensible and future-facing or it will lag behind. Datavolo’s cloud-native architecture assures:

- Scalability to ramp or reduce cloud resources, based on demand, optimizing performance and minimizing cost;

- Resilience through fault tolerance and automatic recovery, providing continuous availability and reliability; and

- Integration with other cloud services and microservices, facilitating efficient multimodal data flows and interoperability across diverse IT ecosystems.

Secure Management for Custom Code

Custom code represents a big enterprise security risk. Our team described this risk in an aptly-titled post: “Custom code adds risk to the enterprise”. Here’s the problem. An AI technology stack must be dynamic, always changing in sync with its data and compute ecosystem. This makes custom code inevitable, and useful. But it still poses a security risk.

Compared to NiFi, Datavolo’s automated processes offer a more streamlined approach to minimizing technical debt and securing custom integrations. Datavolo emphasizes secure and compliant integration of custom code with built-in tools for automated scanning and validation. This makes it easier to remove custom code that may pose a security risk. Datavolo’s cloud-native approach provides continuous updates and automated maintenance, minimizing technical debt and simplifying code management.

We Love Apache NiFi.

Datavolo Puts it to Work for GenAI.

Datavolo’s founders built Apache NiFi. We love it. When we compare Datavolo to Apache NiFi, we’re looking in the mirror. Datavolo includes NiFi.

Because we know NiFi so well, we could see the unique opportunities and challenges that would come with using it to train Generative AI. We started Datavolo to meet those challenges.

For many, many use cases and patterns of use, open-source Apache NiFi will be great.

For GenAI applications, Datavolo will work better and more efficiently.

We’d love to show you how, so book some time with us to discuss whether Datavolo or Apache NiFi is right for your needs.