You did it! You finally led the charge and persuaded your boss to let your team start working on a new generative AI application, and you’re psyched to get started. You get your data and start the ingestion process, but right when you think you’ve nailed it, you...

Data Engineering

Onward with ONNX® – How We Did It

Digging into new AI models is one of the most exciting parts of my job here at Datavolo. However, having a new toy to play with can easily be overshadowed by the large assortment of issues that come up when you’re moving your code from your laptop to a production...

Tutorial – How to Convert to ONNX®

Converting from PyTorch/Safetensors to ONNX®: Given the advantages described in Onward With ONNX®, we’ve taken the opinion that if it runs on ONNX, that’s the way we want to go. So while ONNX has a large model zoo, we’ve had to convert a few models by hand. Many models...

Secure Data Pipeline Observability in Minutes

Monitoring data flows for Apache NiFi has evolved quite a bit since its inception. What started generally with logs and processors sprinkled throughout the pipeline grew to Prometheus REST APIs and a variety of Reporting Tasks. These components pushed NiFi closer to...

How to Package and Deploy Python Processors for Apache NiFi

Introduction: Support for Processors in native Python is one of the most notable new features in Apache NiFi 2. Each milestone version of NiFi 2.0.0 has enhanced Python integration, with milestone 3 introducing support for loading Python Processors from NiFi Archive...

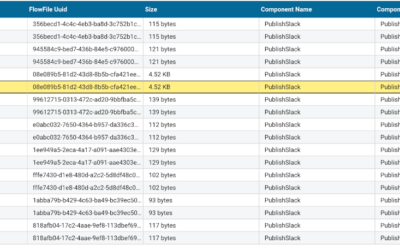

Troubleshooting Custom NiFi Processors with Data Provenance and Logs

We at Datavolo like to drink our own champagne, building internal tooling and operational workflows on top of the Datavolo Runtime, our distribution of Apache NiFi. We’ve written about several of these services, including our observability pipeline and Slack chatbots....

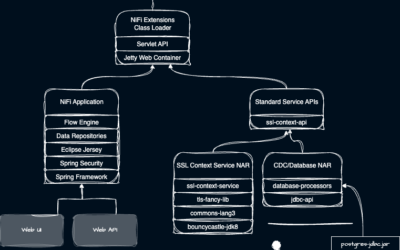

Apache NiFi – designed for extension at scale

AI systems need data from across the whole spectrum: unstructured, structured, and multi-modal. The protocols by which these diverse types of data are acquired and delivered are as varied as the data types themselves. At the same time, data volumes keep growing and latency requirements keep tightening, which demands solutions that scale down and up first, then out. In other words, we need maximum efficiency: we can’t resort to remote procedure calls for every operation, and we need to support hundreds if not thousands of different components or tools in the same virtual machine.

Data Pipeline Observability is Key to Data Quality

In my recent article, “What is Observability,” I discussed how observability is crucial for understanding complex architectures and the interactions and dependencies between their system components. Data Observability, unlike Software Observability, aims to...

Building GenAI enterprise applications with Vectara and Datavolo

The Vectara and Datavolo integration and partnership: When building GenAI apps that are meant to give users rich answers to complex questions or act as an AI assistant (chatbot), we often use Retrieval Augmented Generation (RAG) and want to ground the responses on...

Datavolo Announces Over $21M in Funding!

Datavolo Raises Over $21 Million in Funding from General Catalyst and others to Solve Multimodal Data Pipelines for AI. Phoenix, AZ, April 2, 2024 – Datavolo, the leader in multimodal data pipelines for AI, announced today that it has raised over $21 million in...