Introduction

OpenTelemetry has become a unifying force for software observability, providing a common vocabulary for describing logs, metrics, and traces. With interfaces and instrumentation capabilities in multiple programming languages, OTel presents a compelling solution to application monitoring challenges across diverse systems. Recent releases of Apache NiFi introduced OpenTelemetry collection capabilities using the ListenOTLP Processor.

As a scalable system for data processing and distribution, Apache NiFi has supported log collection, filtering, and routing with a variety of formats for many years. From schema-oriented binary records to unstructured multimedia streams, the core architecture of NiFi enables a wide variety of use cases. The first milestone release of NiFi 2.0.0 presents another example of platform adaptability. With the introduction of a new Processor to collect OpenTelemetry records, recent NiFi releases provide full support for data collection according to the OpenTelemetry Protocol Specification.

The ListenOTLP Processor provides flexible and secure collection capabilities for easy integration with OpenTelemetry pipelines. To understand the basic features of NiFi OpenTelemetry collection, what better source of data than NiFi itself?

Processor Configuration

The first step for building a telemetry collection pipeline in NiFi involves configuring the ListenOTLP Processor.

ListenOTLP includes convenient default values for most properties. The Processor requires TLS encryption for all communications, protecting the content of telemetry during transmission. For this reason, ListenOTLP requires configuring an SSL Context Service.

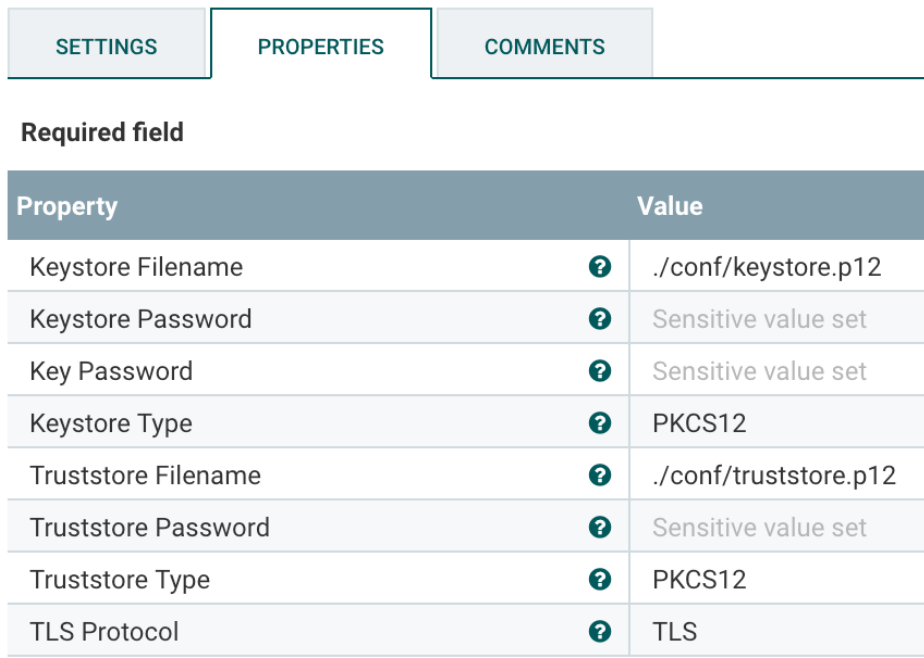

SSL Context Service Configuration

The SSL Context Service requires a Keystore and Keystore Password containing a server certificate and private key, as well as a Truststore and Truststore Password containing one or more certificate authorities.

Production deployment of Public Key Infrastructure is beyond the scope of this article, but for most deployments, the SSL Context Service can use the same Keystore and Truststore configured in NiFi application properties. The default installation of NiFi generates a server certificate and private key, storing the applicable information in standard Keystore and Truststore files.

The nifi.properties configuration in the conf directory of a standard installation contains the standard Keystore and Truststore settings.

The Keystore information is available in the following application properties:

nifi.security.keystore

nifi.security.keystoreType

nifi.security.keystorePasswd

nifi.security.keyPasswd

The Truststore information is available in the following application properties:

nifi.security.truststore

nifi.security.truststoreType

nifi.security.truststorePasswd

These application properties provide values that correspond to SSL Context Service properties. The ListenOTLP Processor is ready to use after configuring the properties and enabling the SSL Context Service.
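The correspondence between application properties and SSL Context Service properties can be sketched in a few lines of Python. This is a minimal illustration, not a NiFi utility: the display names on the right side of the mapping (such as Keystore Filename) are assumptions about how the SSL Context Service labels its properties, and the sample values are placeholders.

```python
# Sketch: translate nifi.properties security entries into SSL Context Service
# property values. The display names in PROPERTY_MAP are assumptions, and the
# SAMPLE values are placeholders rather than real paths or passwords.
PROPERTY_MAP = {
    "nifi.security.keystore": "Keystore Filename",
    "nifi.security.keystoreType": "Keystore Type",
    "nifi.security.keystorePasswd": "Keystore Password",
    "nifi.security.keyPasswd": "Key Password",
    "nifi.security.truststore": "Truststore Filename",
    "nifi.security.truststoreType": "Truststore Type",
    "nifi.security.truststorePasswd": "Truststore Password",
}


def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        result[key.strip()] = value.strip()
    return result


def ssl_context_settings(properties_text):
    """Return SSL Context Service settings for every non-empty security property."""
    props = parse_properties(properties_text)
    return {
        display_name: props[key]
        for key, display_name in PROPERTY_MAP.items()
        if props.get(key)
    }


SAMPLE = """
# placeholder values for illustration only
nifi.security.keystore=./conf/keystore.p12
nifi.security.keystoreType=PKCS12
nifi.security.keystorePasswd=example-keystore-password
nifi.security.keyPasswd=example-keystore-password
nifi.security.truststore=./conf/truststore.p12
nifi.security.truststoreType=PKCS12
nifi.security.truststorePasswd=example-truststore-password
"""

if __name__ == "__main__":
    for name, value in ssl_context_settings(SAMPLE).items():
        print(f"{name}: {value}")
```

Empty property values are skipped, so the result lists only the settings that still need to be copied into the Controller Service.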

Counting OpenTelemetry Files

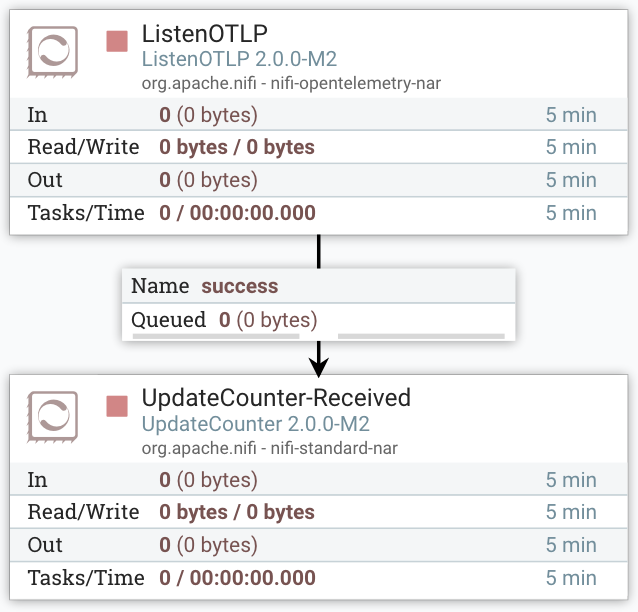

The success relationship of ListenOTLP controls the destination for OpenTelemetry records received. There are many possible options to consider, but for initial pipeline configuration, the UpdateCounter Processor is a straightforward next step.

The Counter Name property in UpdateCounter supports tracking the number of FlowFiles received. Setting the Counter Name to a value such as received provides basic accounting for FlowFiles that ListenOTLP produces. The success relationship of UpdateCounter should be connected to additional components, but it can remain disconnected for initial pipeline evaluation.

With ListenOTLP configured with an SSL Context Service and connected to UpdateCounter, start the ListenOTLP Processor to begin listening for OpenTelemetry records.

Apache NiFi and OpenTelemetry Instrumentation

As one of many integration options, OpenTelemetry provides a Java agent for automatic instrumentation of any Java application. The agent supports a number of configuration options that describe the application being instrumented, the telemetry to be collected, and the endpoint destination.

The NiFi bootstrap configuration supports specifying OpenTelemetry agent properties to enable automatic instrumentation.

Before continuing the configuration, download the opentelemetry-javaagent.jar to the root directory of the NiFi installation. The agent JAR can also be placed elsewhere on the filesystem, provided that the location persists across system restarts and the bootstrap configuration references the correct path.

Certificate Formatting

Sending telemetry to ListenOTLP requires configuring the agent with the certificate authority that issued the server certificate configured in SSL Context Service for the Processor. The OpenTelemetry agent requires certificates to be formatted as PEM files.

The openssl command-line tool can extract certificates from the NiFi application truststore, which is stored in PKCS #12 format, using the following command:

openssl pkcs12 -in truststore.p12 -out trust.pem

The OpenTelemetry agent properties must include a reference to trust.pem.
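Before pointing the agent at trust.pem, it can be worth confirming that the file holds at least one certificate and no private key material. The following Python sketch does this by looking for the standard PEM markers. The function names are hypothetical, and the sample text contains placeholder Base64 content, not a real certificate.

```python
import re

# Matches one PEM certificate block, including its Base64 body.
PEM_CERTIFICATE = re.compile(
    r"-----BEGIN CERTIFICATE-----\s+(.+?)\s+-----END CERTIFICATE-----",
    re.DOTALL,
)

# Matches the opening marker of plain, encrypted, RSA, or EC private keys.
PEM_PRIVATE_KEY = re.compile(r"-----BEGIN (?:[A-Z]+ )*PRIVATE KEY-----")


def count_pem_certificates(pem_text):
    """Return the number of CERTIFICATE blocks found in PEM text."""
    return len(PEM_CERTIFICATE.findall(pem_text))


def contains_private_key(pem_text):
    """Return True when the text includes any PEM private key marker."""
    return PEM_PRIVATE_KEY.search(pem_text) is not None


# Placeholder content for illustration; text outside the markers is ignored.
SAMPLE = """\
arbitrary text before the certificate block
-----BEGIN CERTIFICATE-----
cGxhY2Vob2xkZXI=
-----END CERTIFICATE-----
"""

if __name__ == "__main__":
    print("certificates:", count_pem_certificates(SAMPLE))
    print("private key present:", contains_private_key(SAMPLE))
```

The check is purely textual. It does not validate the certificate itself or confirm that it issued the server certificate.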

Bootstrap Configuration for NiFi and OpenTelemetry

NiFi supports specifying custom Java arguments using properties in the bootstrap.conf configuration. Custom Java argument property names must begin with java.arg and must have a unique name in the configuration.

The OpenTelemetry agent recommends a Service Name property to identify the application being instrumented. The Service Name describes the application and should consist of a short label such as nifi-1 for identification and differentiation from other services.

With the opentelemetry-javaagent.jar in the NiFi root directory and the trust.pem in the NiFi conf directory, configure the following properties in bootstrap.conf to enable automatic instrumentation:

java.arg.otelAgent=-javaagent:./opentelemetry-javaagent.jar

java.arg.otelServiceName=-Dotel.service.name=nifi-1

java.arg.otelCertificate=-Dotel.exporter.otlp.certificate=./conf/trust.pem

java.arg.otelEndpoint=-Dotel.exporter.otlp.endpoint=https://localhost:4317

The default agent configuration enables logs, metrics, and traces based on supported libraries. For OpenTelemetry collection limited to logs, it is possible to disable metrics and traces using the following properties in bootstrap.conf:

java.arg.otelTracesExporter=-Dotel.traces.exporter=none

java.arg.otelMetricsExporter=-Dotel.metrics.exporter=none

Applying bootstrap configuration and enabling OpenTelemetry instrumentation requires restarting the NiFi system.
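Because each java.arg property name must be unique, a quick check of the edited configuration can catch mistakes before a restart. The Python sketch below collects the java.arg properties from bootstrap.conf text, rejects duplicate names, and lists the system properties passed to the agent. The function names are hypothetical and the parser handles only simple key=value lines.

```python
def java_arguments(bootstrap_text):
    """Collect java.arg.* properties and reject duplicate property names."""
    arguments = {}
    for line in bootstrap_text.splitlines():
        line = line.strip()
        if not line.startswith("java.arg."):
            continue
        # Split on the first equals sign only, since values such as
        # -Dotel.service.name=nifi-1 contain their own equals sign.
        name, _, value = line.partition("=")
        if name in arguments:
            raise ValueError(f"duplicate property name: {name}")
        arguments[name] = value
    return arguments


def system_properties(arguments):
    """Extract -Dkey=value pairs from the collected Java arguments."""
    props = {}
    for value in arguments.values():
        if value.startswith("-D") and "=" in value:
            key, _, prop_value = value[2:].partition("=")
            props[key] = prop_value
    return props


SAMPLE = """
java.arg.otelAgent=-javaagent:./opentelemetry-javaagent.jar
java.arg.otelServiceName=-Dotel.service.name=nifi-1
java.arg.otelCertificate=-Dotel.exporter.otlp.certificate=./conf/trust.pem
java.arg.otelEndpoint=-Dotel.exporter.otlp.endpoint=https://localhost:4317
"""

if __name__ == "__main__":
    for key, value in system_properties(java_arguments(SAMPLE)).items():
        print(f"{key} = {value}")
```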

OpenTelemetry Collection

The ListenOTLP Processor supports both gRPC and HTTP with Protobuf or JSON on the same port using HTTP content negotiation. This content negotiation approach avoids the need to open multiple ports for different transport formats and simplifies system configuration. The OpenTelemetry agent uses gRPC as the default protocol for transmission.
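To illustrate the HTTP and JSON option, the following Python sketch builds an OTLP log export request without sending it. It rests on several assumptions: that ListenOTLP listens on localhost port 4317, as in the agent endpoint configured earlier, that it accepts the standard OTLP/HTTP path /v1/logs, and that the scope name and service name shown are arbitrary examples.

```python
import json
import urllib.request

# Assumptions: host and port match the agent endpoint used in this article,
# and /v1/logs is the standard OTLP/HTTP path for log export requests.
ENDPOINT = "https://localhost:4317/v1/logs"


def build_logs_request(message, service_name="example-client"):
    """Build a POST request carrying one log record encoded as OTLP JSON."""
    payload = {
        "resourceLogs": [
            {
                "resource": {
                    "attributes": [
                        {"key": "service.name", "value": {"stringValue": service_name}}
                    ]
                },
                "scopeLogs": [
                    {
                        "scope": {"name": "example.logger"},
                        "logRecords": [{"body": {"stringValue": message}}],
                    }
                ],
            }
        ]
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        # The Content-Type header tells the receiver that the body is JSON.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_logs_request("hello from a test client")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Sending the request would additionally need a TLS context that trusts the server certificate, for example one created with ssl.create_default_context(cafile="./conf/trust.pem") and passed to urllib.request.urlopen. Depending on how client authentication is configured on the Processor, a client certificate may also be needed.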

Regardless of the transport format, ListenOTLP produces FlowFiles containing JSON-encoded representations of telemetry. The JSON structure enables subsequent NiFi processing using available JSON Processors and Controller Services.

ListenOTLP writes several standard FlowFile attributes for general routing. The resource.type attribute contains the type of OpenTelemetry records included: LOGS, METRICS, or TRACES. The resource.count attribute indicates the number of resource records included in the FlowFile. Each OpenTelemetry resource can contain multiple objects, depending on the volume and periodicity of data collection.

Access Logging with NiFi and OpenTelemetry

Collecting OpenTelemetry information presents many potential use cases including aggregation, alerting, and archival. Selecting NiFi HTTP access logs for additional processing illustrates one solution for auditing access to the NiFi user interface and REST API.

Logback is one of several logging libraries supported using OpenTelemetry automatic instrumentation. HTTP access logging in NiFi uses a standard logger named org.apache.nifi.web.server.RequestLog when writing HTTP requests.

The default NiFi configuration uses the de facto standard Combined Log Format for HTTP requests, which includes the client address, username, and path requested. Together with user agent information, these attributes provide insight into client behavior.
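For readers less familiar with the Combined Log Format, the following Python sketch parses a single line into named fields. The regular expression is a common approximation of the format, not the pattern NiFi uses internally, and the sample line is invented for illustration.

```python
import re

# Approximate pattern for the Combined Log Format:
# client identity user [timestamp] "method path protocol" status size "referer" "user agent"
COMBINED_LOG = re.compile(
    r'(?P<client>\S+) (?P<identity>\S+) (?P<user>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_access_log(line):
    """Return a dictionary of fields, or None when the line does not match."""
    match = COMBINED_LOG.match(line)
    return match.groupdict() if match else None


# Invented sample line for illustration only.
SAMPLE = (
    '127.0.0.1 - user [01/Jan/2024:12:00:00 +0000] '
    '"GET /nifi-api/flow/about HTTP/1.1" 200 512 "-" "ExampleAgent/1.0"'
)

if __name__ == "__main__":
    fields = parse_access_log(SAMPLE)
    print(fields["client"], fields["user"], fields["path"], fields["user_agent"])
```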

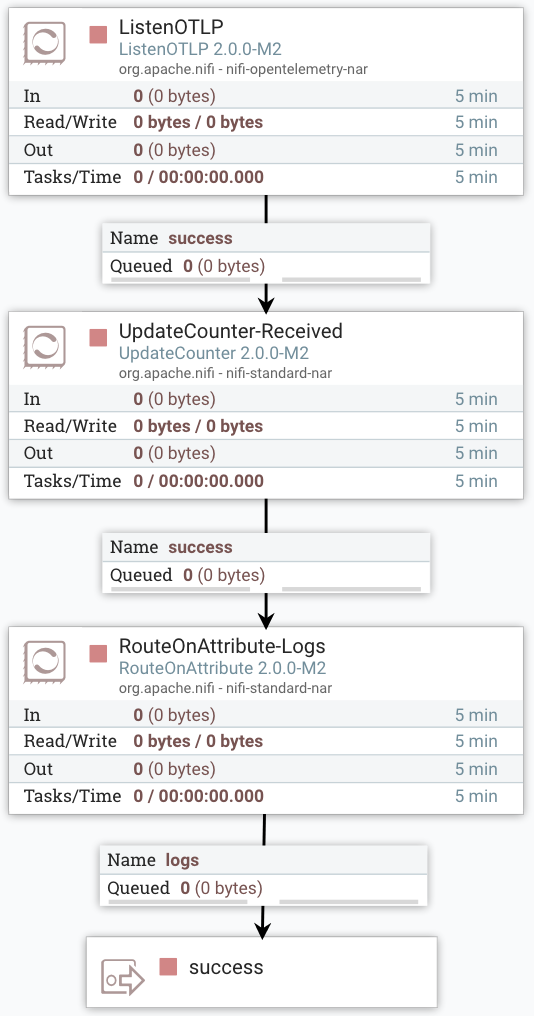

OpenTelemetry Log Routing

Selecting OpenTelemetry logs is the first step to processing HTTP access logs. The RouteOnAttribute Processor supports FlowFile routing based on a configurable statement defined using NiFi Expression Language. Adding a property named logs with the following expression as the value enables routing to a named relationship:

${resource.type:equals('LOGS')}

The logs property defines a new relationship for the RouteOnAttribute Processor that enables connecting to another Processor for subsequent filtering.
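The routing decision can be pictured as a simple function over FlowFile attributes. This Python sketch mirrors the expression above, assuming that FlowFiles which do not satisfy it go to the unmatched relationship. The function name is hypothetical.

```python
def route(attributes):
    """Return the relationship name for a dictionary of FlowFile attributes."""
    # The comparison is exact and case-sensitive, like equals in the expression.
    if attributes.get("resource.type") == "LOGS":
        return "logs"
    return "unmatched"


if __name__ == "__main__":
    print(route({"resource.type": "LOGS", "resource.count": "1"}))
    print(route({"resource.type": "METRICS", "resource.count": "4"}))
```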

The OpenTelemetry logs format nests log messages within several layers of parent objects. The OpenTelemetry JSON examples directory includes log collection with metadata and message information. For HTTP access logs in NiFi, the following JSON shows the location of the message itself within the standard hierarchy.

    {
      "resourceLogs": [
        {
          "scopeLogs": [
            {
              "scope": {
                "name": "org.apache.nifi.web.server.RequestLog"
              },
              "logRecords": [
                {
                  "body": {
                    "stringValue": "127.0.0.1 - user"
                  }
                }
              ]
            }
          ]
        }
      ]
    }
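To make the nesting concrete, the following Python sketch walks the hierarchy and collects message bodies for a given logger. It assumes the layout described here, with resourceLogs containing scopeLogs containing logRecords. The function name and the abbreviated sample document are illustrative.

```python
import json

# Abbreviated sample document following the hierarchy described above.
SAMPLE = json.loads("""
{
  "resourceLogs": [
    {
      "scopeLogs": [
        {
          "scope": {"name": "org.apache.nifi.web.server.RequestLog"},
          "logRecords": [
            {"body": {"stringValue": "127.0.0.1 - user"}}
          ]
        }
      ]
    }
  ]
}
""")


def log_messages(document, scope_name):
    """Collect string message bodies logged under the named scope."""
    messages = []
    for resource_log in document.get("resourceLogs", []):
        for scope_log in resource_log.get("scopeLogs", []):
            if scope_log.get("scope", {}).get("name") != scope_name:
                continue
            for record in scope_log.get("logRecords", []):
                body = record.get("body", {})
                if "stringValue" in body:
                    messages.append(body["stringValue"])
    return messages


if __name__ == "__main__":
    for message in log_messages(SAMPLE, "org.apache.nifi.web.server.RequestLog"):
        print(message)
```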

For organized processing, a Process Group with an Output Port provides a consolidated location for initial OpenTelemetry collection. Creating an Output Port named success provides a clear destination for OpenTelemetry Logs, which can be connected to another Processor or Process Group for subsequent filtering.

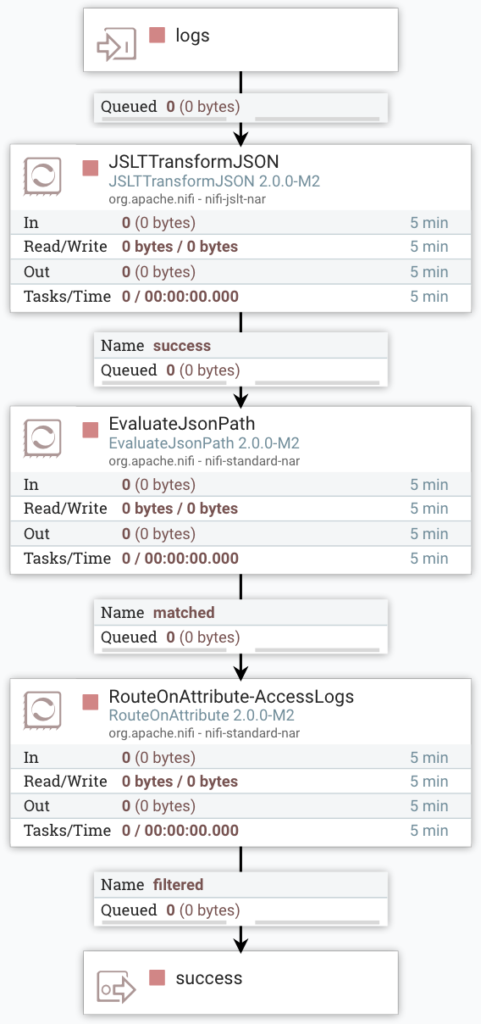

JSON Filtering and Formatting

NiFi provides several options for transforming JSON. The hierarchical structure of OpenTelemetry Logs presents a challenge for selective filtering, but the JSLTTransformJSON Processor provides one solution using the JSLT domain-specific language.

With inspiration from XML processing languages like XPath and XQuery, JSLT supports JSON transformation using a combination of basic functions and conditional grammar. The JSLT demo playground provides an interactive application for writing and evaluating transformations.

OpenTelemetry Logs can contain one or more objects in the scopeLogs array, with each element containing a scope object having a name field corresponding to the Logback logger. For the purpose of selecting only NiFi HTTP access logs, the name field supports basic filtering. For initial processing, it is useful to retain the OpenTelemetry resource information in the original structure collected, and JSLT supports an expressive way to both retain the structure and filter on a specific scope name.

JSLT Transformation for NiFi and OpenTelemetry Logs

Configure the following specification in the JSLT Transformation property of the JSLTTransformJSON Processor to produce filtered results.

    {
      "resourceLogs": [
        for (.resourceLogs)
        {
          "resource": .resource,
          "scopeLogs": [
            for (.scopeLogs) .
            if (
              .scope.name == "org.apache.nifi.web.server.RequestLog"
            )
          ]
        }
      ]
    }

The JSLT expression contains a hybrid representation of JSON with loops and conditional filtering. The root resourceLogs field matches the root field name in OpenTelemetry log collection. The next line declares a loop over the original resourceLogs field to produce a new array. The body of the main loop declares a destination object structure consisting of a resource field and a scopeLogs field. The resource field redeclares the original resource element at the same level.

The scopeLogs element declares a new array built from a loop over the original scopeLogs field. The period character after (.scopeLogs) indicates that the current array element should be used in the new array. The conditional following the loop implements the logger filtering, requiring that the nested scope object have a name field with a value of org.apache.nifi.web.server.RequestLog to be included in the new array.

The net result of the transformation is an OpenTelemetry Log resource containing logs limited to NiFi HTTP request information.
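For readers more comfortable with general-purpose languages, the same filtering logic can be approximated in Python. This sketch is an analogy, not a replacement for the JSLT specification: the function name is hypothetical, the sample data is invented, and details such as the handling of missing or null fields may differ from JSLT behavior.

```python
REQUEST_LOG = "org.apache.nifi.web.server.RequestLog"


def filter_scope_logs(document, scope_name=REQUEST_LOG):
    """Keep each resource, retaining only scopeLogs entries for the named scope."""
    return {
        "resourceLogs": [
            {
                # Carry the original resource element over unchanged.
                "resource": resource_log.get("resource"),
                # Rebuild scopeLogs from the elements that match the scope name.
                "scopeLogs": [
                    scope_log
                    for scope_log in resource_log.get("scopeLogs", [])
                    if scope_log.get("scope", {}).get("name") == scope_name
                ],
            }
            for resource_log in document.get("resourceLogs", [])
        ]
    }


# Invented sample with one matching scope and one unrelated scope.
SAMPLE = {
    "resourceLogs": [
        {
            "resource": {"attributes": []},
            "scopeLogs": [
                {
                    "scope": {"name": REQUEST_LOG},
                    "logRecords": [{"body": {"stringValue": "127.0.0.1 - user"}}],
                },
                {
                    "scope": {"name": "example.other.Logger"},
                    "logRecords": [{"body": {"stringValue": "unrelated message"}}],
                },
            ],
        }
    ]
}

if __name__ == "__main__":
    filtered = filter_scope_logs(SAMPLE)
    print(len(filtered["resourceLogs"][0]["scopeLogs"]))
```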

The Transformation Strategy property in the JSLTTransformJSON Processor must be set to Entire FlowFile for proper expression evaluation. Keep the remaining properties unchanged.

Following JSON transformation to remove logs that do not match the selected scope name, the resulting JSON still contains resource metadata fields. This information is not required for subsequent processing as it is repeated in each FlowFile. At this point, FlowFiles that do not contain HTTP logs can be dropped. Combining JSON path evaluation and attribute routing enables dropping filtered FlowFiles.

FlowFile Filtering

The EvaluateJsonPath Processor supports executing one or more JSON path expressions against FlowFile JSON contents. The Processor sets FlowFile attributes named after its custom properties when the Destination property is set to flowfile-attribute.

Based on the JSON structure of OpenTelemetry Logs, evaluating the length of the logRecords array indicates whether the JSLT transformation retained or removed records. The following JSON path expression returns the array length of the nested logRecords field.

$..logRecords.length()

Adding a property named logRecords with this path expression configures the Processor to set the logRecords FlowFile attribute to the array length, which is greater than zero only when the transformation retained HTTP access log records.

The RouteOnAttribute Processor supports the final step of initial processing. Configure a custom property named filtered having a value that uses Expression Language to determine whether the logRecords attribute is greater than zero.

${logRecords:gt(0)}
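The combined effect of the path evaluation and the attribute check can be approximated in Python. This sketch counts logRecords entries across all scopes and routes on the result. It does not reproduce the exact semantics of the JSON path library, and it assumes that FlowFiles failing the check go to the unmatched relationship. The function names are hypothetical.

```python
def count_log_records(document):
    """Count logRecords entries across every scope in every resource."""
    total = 0
    for resource_log in document.get("resourceLogs", []):
        for scope_log in resource_log.get("scopeLogs", []):
            total += len(scope_log.get("logRecords", []))
    return total


def route_filtered(document):
    """Return 'filtered' when any log records remain, otherwise 'unmatched'."""
    # FlowFile attributes are strings, so the count is stored as text and
    # converted back to a number for the comparison.
    attributes = {"logRecords": str(count_log_records(document))}
    return "filtered" if int(attributes["logRecords"]) > 0 else "unmatched"


RETAINED = {
    "resourceLogs": [
        {
            "resource": {},
            "scopeLogs": [
                {
                    "scope": {"name": "org.apache.nifi.web.server.RequestLog"},
                    "logRecords": [{"body": {"stringValue": "127.0.0.1 - user"}}],
                }
            ],
        }
    ]
}

# Shape of a document after the transformation removed every scope.
REMOVED = {"resourceLogs": [{"resource": {}, "scopeLogs": []}]}

if __name__ == "__main__":
    print(route_filtered(RETAINED))
    print(route_filtered(REMOVED))
```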

Filtering logs down to HTTP request information provides the foundation for subsequent processing. Options include sending OpenTelemetry to persistent storage or parsing of the access log fields.

Conclusion

Logging is just one of the three primary features of OpenTelemetry, but it provides a helpful introduction to the power of OpenTelemetry automatic instrumentation. Combining Apache NiFi and OpenTelemetry collection makes it possible to implement strategic monitoring using robust components and focused transformation definitions. Deploying NiFi pipelines for collection and processing enables engineers to create scalable observability solutions that bridge the gap between historical approaches and modern technologies. Building on an extensible framework not only solves current problems, but paves the way for integration with future advances in artificial intelligence and beyond.