Solution Walkthrough: Advanced Document Processing

Making financial 10Ks useful for AI apps has become the “hello world” of GenAI demos.

It is a meaningful starting point because 10Ks are large, complex documents that contain diverse content, including text, tables, and figures.

Moreover, the text itself is spread across the document layout, appearing in titles, section headers, the body, footnotes, and elsewhere.

To make these complex documents useful for AI apps, data engineers must execute several steps: acquisition; parsing and layout detection, including extraction of tabular data and figures; cleansing and transformation; chunking; embedding; and writing to retrieval systems. In the enterprise setting, each of these steps needs to be scalable, continuous, and governed. In this solution walkthrough, we explore each step in depth, along with how Datavolo empowers data engineers to deliver these solutions in a way that meets these enterprise objectives.

Acquisition

The applications that produce data are fundamentally different from the analytical and predictive applications that consume data. Think about an AI app and the data in systems of record that fuel it. These differences relate to the location of this data within companies’ networks and data platforms as well as the form that this data takes across these systems. Getting data to the right system, in the right form, at the right time is critical for the success of analytical and AI apps.

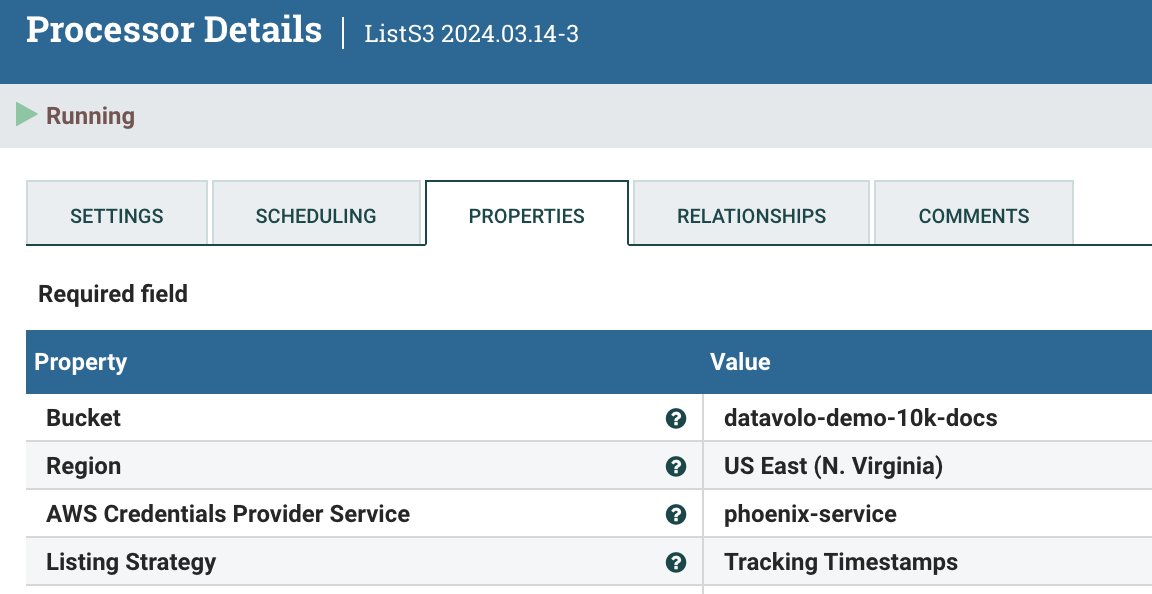

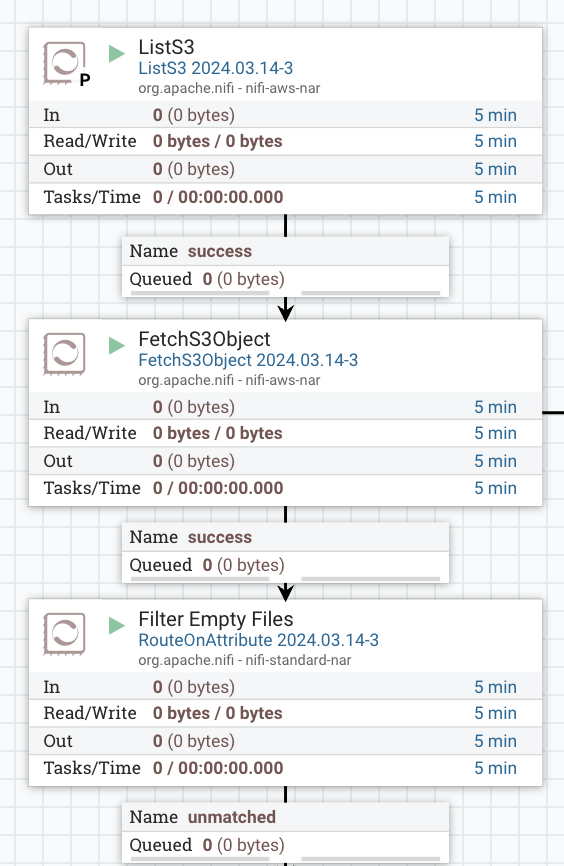

To get started, data engineers need to be able to acquire data from external systems easily, securely, and at scale. With Datavolo’s out-of-the-box processors supporting a large variety of sources, the List/Fetch pattern for scalability, and tracking strategies that recognize and process new events as they arrive, data engineers are set up for success from the start.
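To make the List/Fetch pattern and timestamp-based tracking concrete, here is a minimal Python sketch. It is not Datavolo’s implementation: the `RemoteObject` record, the in-memory listing, and the `fetch` callback are hypothetical stand-ins for a real source such as an object store. The list phase emits only objects modified since the last tracked timestamp, and the fetch phase retrieves content for just those objects, which is what lets the two phases scale independently.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class RemoteObject:
    """Hypothetical listing entry, e.g. one object in a bucket or one file on SFTP."""
    key: str
    last_modified: float  # epoch seconds reported by the source


class TimestampTracker:
    """List phase: remember the newest timestamp seen and emit only newer objects."""

    def __init__(self) -> None:
        self.high_water_mark = float("-inf")

    def list_new(self, listing: Iterable[RemoteObject]) -> list[RemoteObject]:
        new = [obj for obj in listing if obj.last_modified > self.high_water_mark]
        if new:
            self.high_water_mark = max(obj.last_modified for obj in new)
        return sorted(new, key=lambda obj: obj.last_modified)


def fetch_all(objects: list[RemoteObject], fetch: Callable[[str], bytes]) -> dict[str, bytes]:
    """Fetch phase: pull content only for listed objects (could be fanned out across workers)."""
    return {obj.key: fetch(obj.key) for obj in objects}
```

A production tracker would also need to handle objects that share the high-water-mark timestamp but show up late, and to persist its state across restarts; this sketch ignores both.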

Data engineers do not want to write and maintain custom code for every integration their use cases require. Nor do they want to worry about scalability, and they want handling errors to be easy, whether through retry logic, backpressure, or other techniques. Datavolo empowers data engineers by shipping with a large number of integrations out of the box, by providing a continuous and automated platform that scales to high-volume ingestion, and by supporting many patterns for handling errors when the reality of their data departs from the happy path.
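Retry and backpressure are general techniques rather than anything Datavolo-specific. The sketch below shows both in plain Python to illustrate the kind of code a platform spares engineers from hand-writing per integration. `TransientError`, the default attempt count, and the delays are assumptions chosen for illustration.

```python
import queue
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying, such as timeouts or throttling."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry transient failures, doubling the delay after each failed attempt."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error, e.g. route to a failure path
            sleep(base_delay * 2**attempt)
    raise ValueError("max_attempts must be at least 1")


def enqueue_with_backpressure(work: "queue.Queue[str]", item: str, timeout: float = 1.0) -> bool:
    """Backpressure: a bounded queue makes a fast producer wait instead of flooding a slow consumer."""
    try:
        work.put(item, timeout=timeout)
        return True
    except queue.Full:
        return False  # queue stayed full: the caller can slow down or divert the item
```

The injectable `sleep` parameter is a design choice that keeps the backoff schedule testable without real waiting.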

Parsing

As AI makes more varied forms of data useful for analytics and prediction, the data that data engineers must know how to process is skewing increasingly toward unstructured and multimodal content. Much of this complex, unstructured data is difficult to make useful without sophisticated approaches. Datavolo uses ML models throughout the pre-processing layer to help data engineers drive high-value outcomes from this unstructured data.

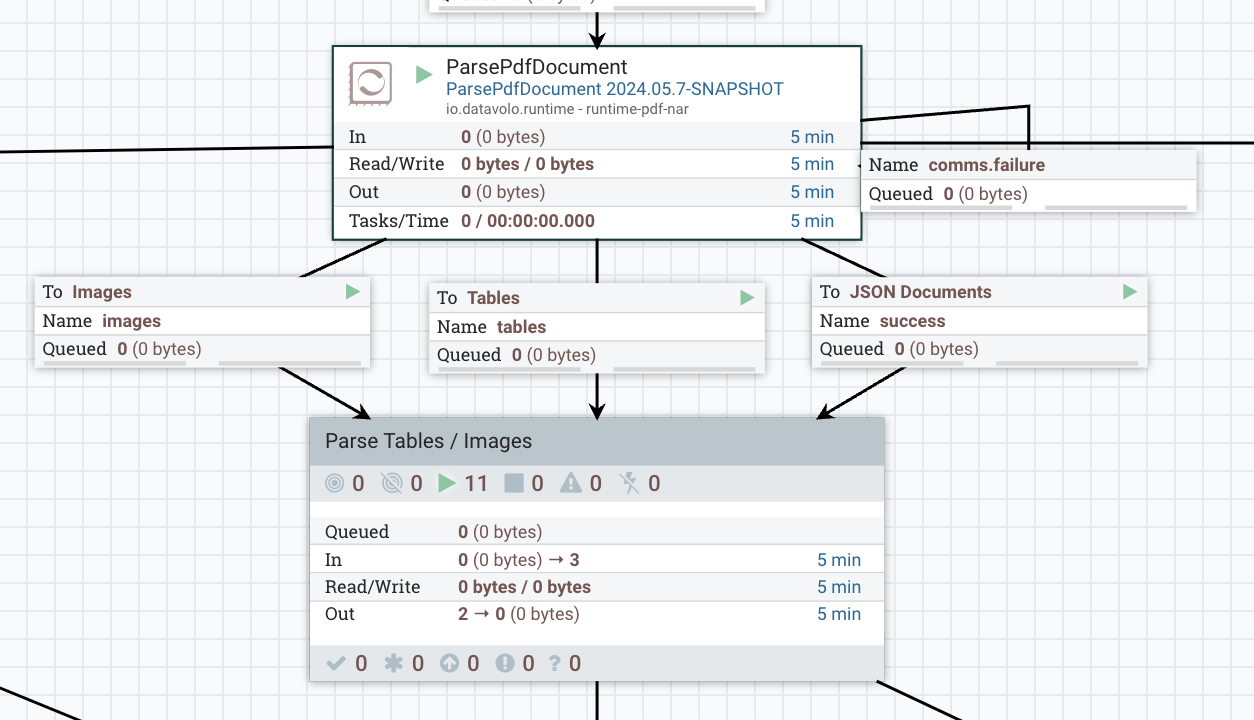

In particular, for parsing complex PDFs, Datavolo has released several new services:

- A layout detection service for PDFs, built on a version of the YOLOX-m model custom-trained on the DocLayNet dataset, that derives bounding boxes and labels for every component of the document, including tables, images, and the structural characteristics of the text that distinguish titles, section headers, footnotes, etc. The service outputs a JSON representation of the raw document and supports routing each labeled component to a different downstream processor, for example routing components labeled as tables to the table parsing service

- A table parsing service, built on Microsoft’s Table Transformer model, that extracts rows, columns, and cells from embedded tables, with the correct data types, for downstream processing by language models

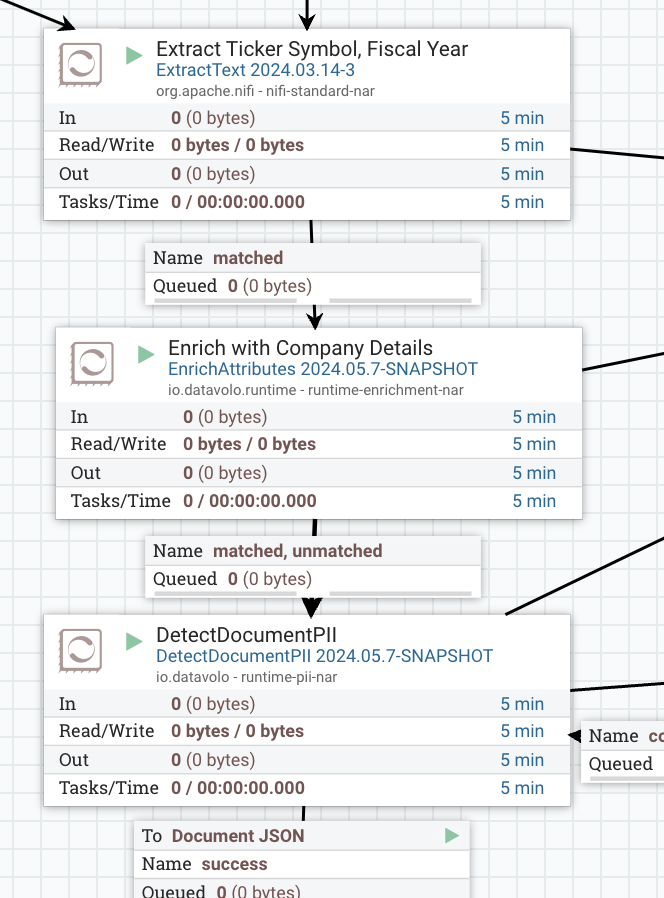

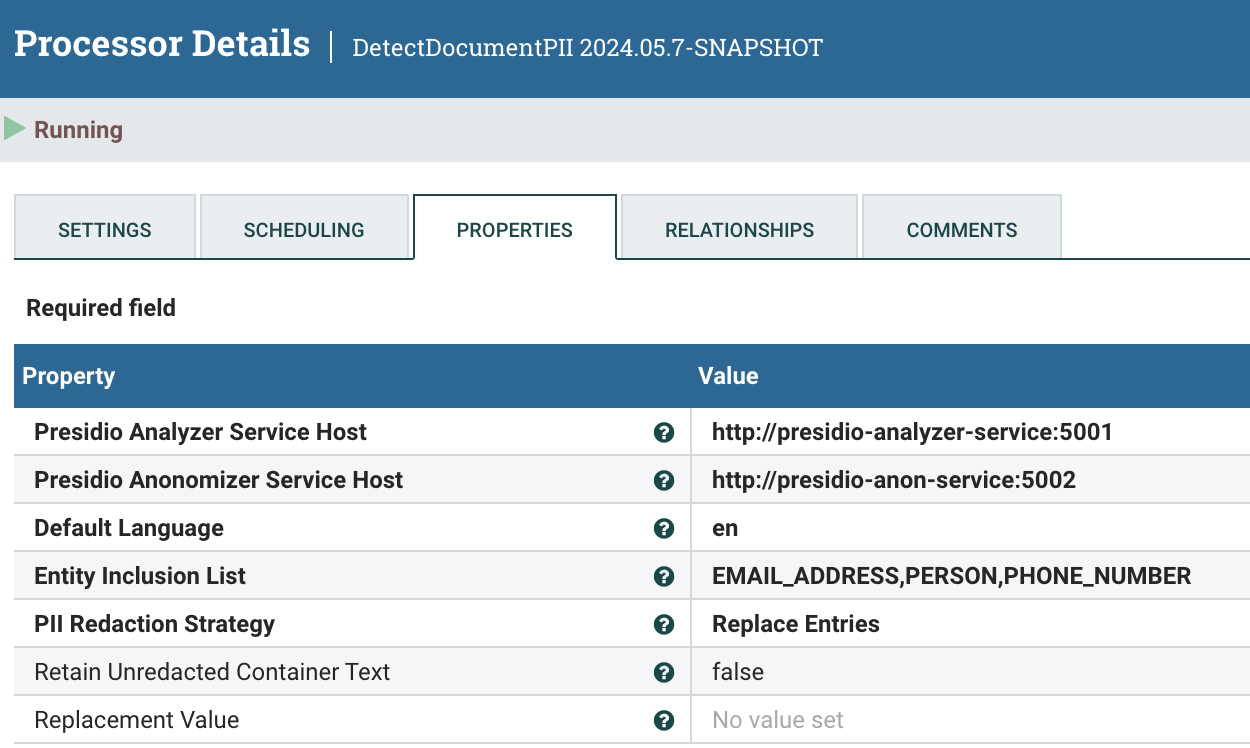

- A PII detection and redaction service, built on Microsoft’s Presidio framework, that recognizes sensitive entities and removes or masks them, providing guardrails that prevent such data from being sent downstream

- Supporting processors to merge the processed document elements back into the JSON representation of a parsed document
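The route-and-merge flow described in this list can be sketched in a few lines of Python, assuming a hypothetical JSON schema in which each detected element carries an `id`, a DocLayNet-style `label`, and its `text`. The table parser and redactor here are deliberately trivial stand-ins (a pipe-delimited splitter and an email regex), not Table Transformer or Presidio; the point is the shape of the flow: group elements by label, send each group to its handler, then merge the results back into reading order.

```python
import re
from collections import defaultdict
from typing import Callable

# Hypothetical element shape: {"id": int, "label": str, "text": str}.
# Labels follow DocLayNet classes such as Title, Section-header, Text, Table, Footnote.
Element = dict

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def _to_number(cell: str):
    try:
        return float(cell.replace(",", ""))
    except ValueError:
        return cell


def parse_table_stub(element: Element) -> Element:
    """Stand-in for the table parsing service: rows split on newlines, cells on '|'."""
    rows = [[_to_number(c.strip()) for c in line.split("|")] for line in element["text"].splitlines()]
    return {**element, "rows": rows}


def redact_stub(element: Element) -> Element:
    """Stand-in for PII redaction: masks email addresses only."""
    return {**element, "text": EMAIL.sub("<EMAIL_ADDRESS>", element["text"])}


HANDLERS: dict[str, Callable[[Element], Element]] = {
    "Table": parse_table_stub,
    "Text": redact_stub,
    "Footnote": redact_stub,
}


def route_and_merge(elements: list[Element]) -> list[Element]:
    # Route: group elements by label so each group can go to its own downstream service.
    groups = defaultdict(list)
    for element in elements:
        groups[element["label"]].append(element)
    processed = []
    for label, group in groups.items():
        handler = HANDLERS.get(label, lambda e: e)  # unhandled labels pass through unchanged
        processed.extend(handler(e) for e in group)
    # Merge: restore reading order using each element's id.
    return sorted(processed, key=lambda e: e["id"])
```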

Cleansing and Transformation

After parsing, data engineers need to clean and transform the derived JSON representation of the raw document to make it useful for downstream consumption. Examples of cleansing include stripping extraneous whitespace and special characters such as newlines, dropping footnotes, and removing artifacts such as bad Unicode characters.
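A minimal cleansing pass over parsed elements might look like the following. It assumes a hypothetical element schema with a `label` and a `text` field per element and uses only the standard library; which labels to drop and which Unicode normalization form to apply are judgment calls that would vary by corpus.

```python
import re
import unicodedata

DROP_LABELS = frozenset({"Footnote", "Page-header", "Page-footer"})


def clean_elements(elements: list[dict], drop_labels: frozenset = DROP_LABELS) -> list[dict]:
    """Return cleaned copies of parsed elements, skipping unwanted labels and empty text."""
    cleaned = []
    for element in elements:
        if element["label"] in drop_labels:
            continue  # drop footnotes and running headers/footers entirely
        # NFKC folds compatibility characters: U+FB01 ligature -> "fi", no-break space -> space.
        text = unicodedata.normalize("NFKC", element["text"])
        text = text.replace("\ufffd", "")  # remove U+FFFD replacement characters left by bad decoding
        text = re.sub(r"\s+", " ", text).strip()  # collapse newlines, tabs, and repeated spaces
        if text:
            cleaned.append({**element, "text": text})
    return cleaned
```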

Transformation is about ensuring that data arrives in the right form when it is written to downstream retrieval and search systems. With the rise of multimodal data, the importance of transformation during pre-processing has re-emerged. The ELT (extract, load, transform) pattern common in pipelines that move structured, row-based data from databases to data warehouses does not work well in the multimodal setting, because data must be transformed (parsed, chunked, and embedded) before it lands in retrieval systems such as vector databases.

In particular, one of the key transformation steps is to enrich text chunks with context from outside of a given document, for example, using company-level attributes associated with the financial 10K in question. We’re releasing a new EnrichAttributes processor to make it easy for data engineers to supplement parsed documents with additional, external metadata.
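Conceptually, this kind of enrichment is a join between chunks and an external attribute table. The sketch below shows that join in plain Python; it is not the EnrichAttributes processor’s interface, and the `ticker` lookup key and the attribute names are illustrative assumptions.

```python
def enrich_chunks(chunks: list[dict], company_attributes: dict[str, dict], key: str = "ticker") -> list[dict]:
    """Attach external, company-level attributes to each chunk's metadata via a lookup key."""
    enriched = []
    for chunk in chunks:
        attributes = company_attributes.get(chunk["metadata"].get(key), {})
        # Chunk metadata is applied last, so values derived from the document win on collisions.
        enriched.append({**chunk, "metadata": {**attributes, **chunk["metadata"]}})
    return enriched
```

Returning new dictionaries rather than mutating the input keeps the original parsed chunks available for other branches of the pipeline.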

Chunking

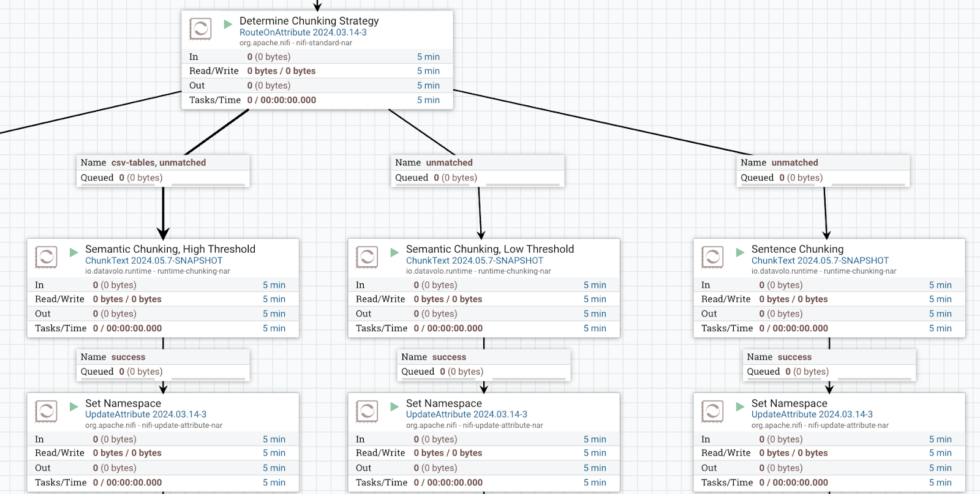

One area where data engineers spend considerable time when pre-processing complex, unstructured data to generate useful embeddings is the chunking strategy. Embeddings are widely used in retrieval to support RAG patterns for LLM integration, and can also be used for tasks such as document clustering. Approaches range from naively splitting a large document every 500 characters to advanced semantic chunking strategies driven by embeddings and cosine similarity.
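To make the two ends of that range concrete, here is a hedged Python sketch of a fixed-size splitter and a simple semantic splitter that starts a new chunk whenever the cosine similarity between consecutive sentence embeddings drops below a threshold. The `embed` callable stands in for whatever embedding model is in use, and the 0.5 threshold is an arbitrary illustrative default.

```python
import math
from typing import Callable, Sequence


def fixed_size_chunks(text: str, size: int = 500) -> list[str]:
    """Naive baseline: cut every `size` characters, ignoring sentence boundaries."""
    return [text[i : i + size] for i in range(0, len(text), size)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_chunks(
    sentences: list[str], embed: Callable[[str], Sequence[float]], threshold: float = 0.5
) -> list[str]:
    """Group consecutive sentences, breaking where adjacent embeddings are dissimilar."""
    if not sentences:
        return []
    vectors = [embed(s) for s in sentences]
    chunks, current = [], [sentences[0]]
    for prev, vec, sentence in zip(vectors, vectors[1:], sentences[1:]):
        if cosine(prev, vec) < threshold:
            chunks.append(" ".join(current))  # topic shift: close the current chunk
            current = []
        current.append(sentence)
    chunks.append(" ".join(current))
    return chunks
```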

In general, data engineers must strike a balance between chunks whose embeddings are semantically precise enough to drive effective retrieval and chunks whose text is rich enough to be useful in the generation step, when they are passed to a language model. Moreover, unlike HTML or Markdown documents, complex PDFs carry no structural markup that distinguishes the different parts of the document. The ML-derived labels discussed above, which capture the structural characteristics of text throughout the document, therefore become very important for downstream chunking.
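Assuming a hypothetical element schema in which each parsed element has a DocLayNet-style `label` and its `text`, structural chunking can key off the `Title` and `Section-header` labels: each heading opens a new chunk, and the heading is kept alongside the chunk so it can travel into the vector store as metadata. This is a sketch of the idea, not Datavolo’s processor.

```python
HEADING_LABELS = {"Title", "Section-header"}


def structural_chunks(elements: list[dict]) -> list[dict]:
    """Start a new chunk at every heading label; body elements attach to the latest heading."""
    chunks = []
    heading, texts = None, []
    for element in elements:
        if element["label"] in HEADING_LABELS:
            if texts:  # close the previous section only if it has body text
                chunks.append({"heading": heading, "text": " ".join(texts)})
            heading, texts = element["text"], []
        else:
            texts.append(element["text"])
    if texts:
        chunks.append({"heading": heading, "text": " ".join(texts)})
    return chunks
```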

Datavolo has shipped several new processors for implementing advanced chunking strategies, as well as patterns which enable A/B testing across combinations of different chunking and parsing strategies. We’ve found that patterns that combine both structural and semantic chunking, recursively, work best for classes of documents like financial 10Ks.
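One way to read “structural and semantic chunking, recursively” is: chunk by section first, then recursively split any section that is still too large at its weakest semantic boundary. The sketch below implements that reading under stated assumptions; it is not Datavolo’s processor logic, and `embed`, `max_chars`, and the regex sentence splitter are all simplifications.

```python
import math
import re
from typing import Callable, Sequence

Embed = Callable[[str], Sequence[float]]


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def split_recursively(sentences: list[str], embed: Embed, max_chars: int) -> list[str]:
    text = " ".join(sentences)
    if len(text) <= max_chars or len(sentences) == 1:
        return [text]
    vectors = [embed(s) for s in sentences]
    # Cut at the least similar pair of adjacent sentences, then recurse on both halves.
    sims = [_cosine(a, b) for a, b in zip(vectors, vectors[1:])]
    cut = min(range(len(sims)), key=sims.__getitem__) + 1
    return split_recursively(sentences[:cut], embed, max_chars) + split_recursively(
        sentences[cut:], embed, max_chars
    )


def hybrid_chunks(sections: list[dict], embed: Embed, max_chars: int = 1000) -> list[dict]:
    """Structural pass (sections with headings) first; semantic splitting only for oversized sections."""
    chunks = []
    for section in sections:
        sentences = re.split(r"(?<=[.!?])\s+", section["text"].strip())
        for piece in split_recursively(sentences, embed, max_chars):
            chunks.append({"heading": section["heading"], "text": piece})
    return chunks
```

For clarity this re-embeds sentences at every recursion level; a real pipeline would embed each sentence once and reuse the vectors.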

Embedding and Loading

The data engineers and AI teams we work with have expressed a consistent need for flexibility and composability in multimodal data pipelines. They are seeing a lot of volatility on the path toward useful design patterns and solutions for the emerging AI stack. Given this, we have designed Datavolo to be agnostic to the embedding models, language models, and retrieval systems, such as vector databases, that these teams adopt.
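Model- and store-agnostic design usually comes down to coding against small interfaces. The following Python sketch uses `typing.Protocol` to express that idea; the `Embedder` and `VectorStore` protocols and their method signatures are hypothetical, chosen for illustration, and any real embedding model or vector database client would be wrapped in an adapter that satisfies them.

```python
from typing import Protocol, Sequence


class Embedder(Protocol):
    def embed(self, texts: Sequence[str]) -> list[list[float]]: ...


class VectorStore(Protocol):
    def upsert(self, records: list[dict], namespace: str) -> None: ...


def load_chunks(chunks: list[dict], embedder: Embedder, store: VectorStore, namespace: str) -> int:
    """Embed and write chunks; the pipeline depends only on the two interfaces above."""
    vectors = embedder.embed([c["text"] for c in chunks])
    records = [
        {"id": c["id"], "values": v, "metadata": c.get("metadata", {})}
        for c, v in zip(chunks, vectors)
    ]
    store.upsert(records, namespace=namespace)
    return len(records)
```

Because protocols are checked structurally, swapping one embedding model or vector database for another means writing a new adapter, not touching `load_chunks`.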

Datavolo has done extensive work in the ecosystem to develop a large number of partner integrations with key parts of the AI stack. Datavolo delivers production-grade integrations, subjecting them to performance testing and secure release engineering, and commits to maintaining them over time as new functionality arises.

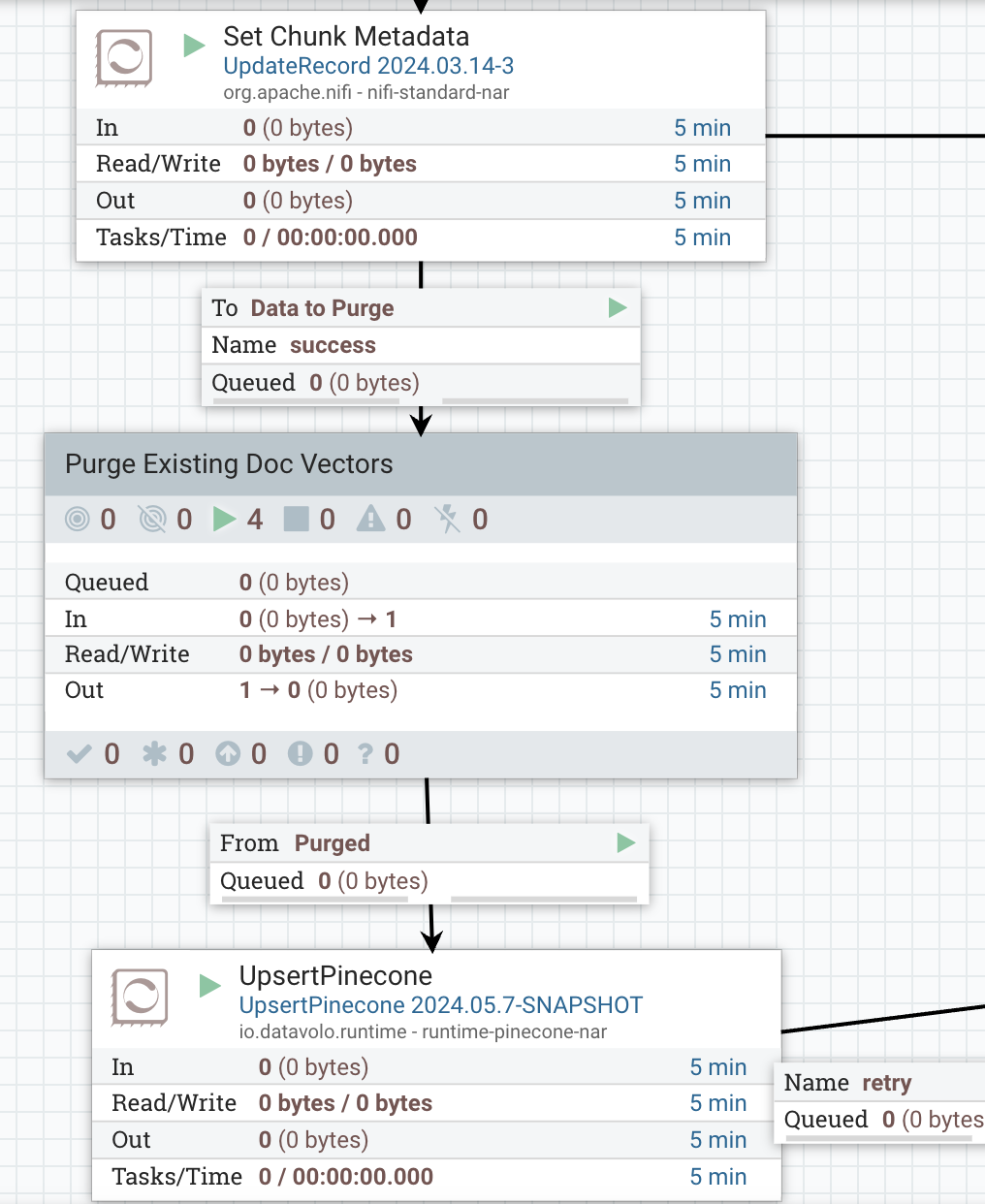

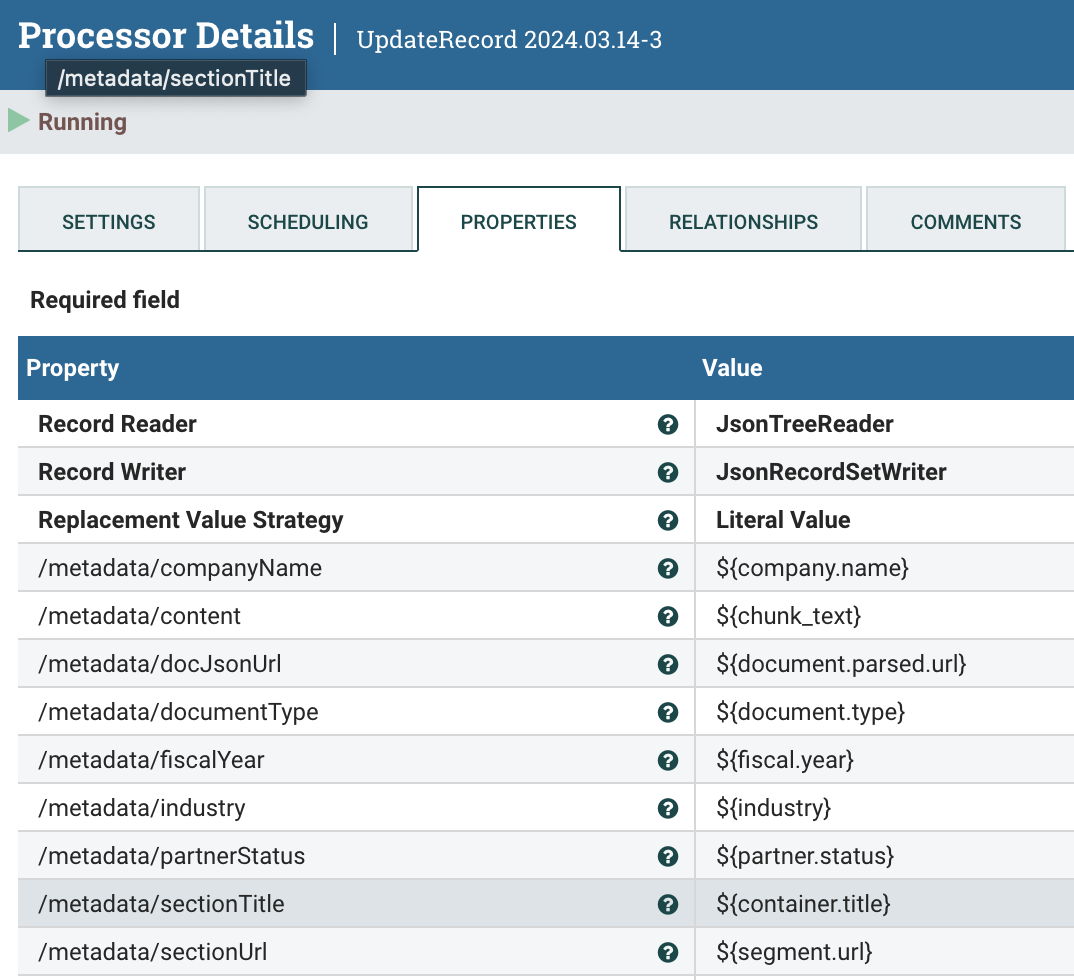

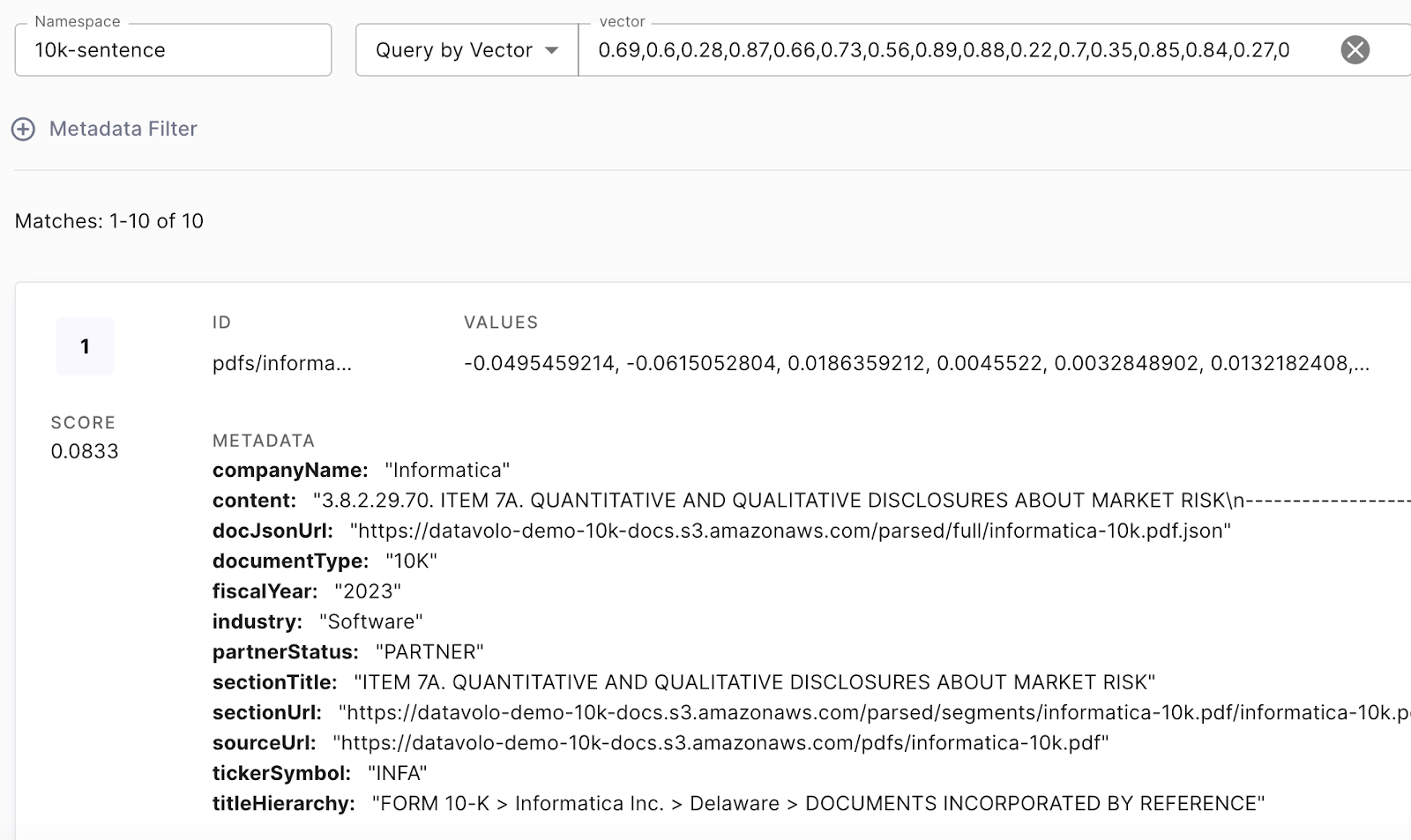

Let’s close by highlighting three important aspects of loading data into vector databases, using Pinecone as an example. First, when we write vectors to a vector database, it’s critical that we store both content (the embedding vectors themselves) and context (the metadata associated with the chunks that produced them). This context supports hybrid search, which combines metadata filtering with semantic search, as well as re-ranking logic that uses the scores associated with the content. The hierarchy of the document chunks is also important context; Datavolo’s data provenance capabilities capture it to support advanced RAG patterns such as small-to-big retrieval.
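A hedged sketch of what “content plus context” and small-to-big can look like in records. Each small child chunk is embedded, and its metadata carries filterable attributes plus a `parent_id` pointing at the larger parent chunk. The id scheme, the `child_size` default, and the in-memory `parents` lookup are assumptions for illustration (the article attributes the real hierarchy tracking to Datavolo’s provenance). With the Pinecone Python client, records of this shape can be passed to `index.upsert(vectors=records, namespace=...)`, and a hybrid query can pass a metadata filter such as `{"ticker": {"$eq": "ACME"}}` to `index.query(...)` together with the query vector.

```python
from typing import Callable, Sequence


def build_records(
    doc_id: str,
    parent_chunks: list[dict],
    embed: Callable[[str], Sequence[float]],
    child_size: int = 200,
) -> tuple[list[dict], dict[str, str]]:
    """Embed small child chunks, keeping a pointer to each larger parent (small-to-big)."""
    records: list[dict] = []
    parents: dict[str, str] = {}
    for p_idx, parent in enumerate(parent_chunks):
        parent_id = f"{doc_id}#p{p_idx}"
        parents[parent_id] = parent["text"]
        text = parent["text"]
        for c_idx, start in enumerate(range(0, len(text), child_size)):
            child = text[start : start + child_size]
            records.append({
                "id": f"{parent_id}-c{c_idx}",
                "values": list(embed(child)),  # content: the embedding vector
                "metadata": {  # context: filterable attributes plus the chunk hierarchy
                    **parent.get("metadata", {}),
                    "doc_id": doc_id,
                    "parent_id": parent_id,
                    "text": child,
                },
            })
    return records, parents


def expand_to_parents(matches: list[dict], parents: dict[str, str]) -> list[str]:
    """Small-to-big: replace matched child chunks with their parent text, without duplicates."""
    seen: set[str] = set()
    expanded: list[str] = []
    for match in matches:
        parent_id = match["metadata"]["parent_id"]
        if parent_id not in seen:
            seen.add(parent_id)
            expanded.append(parents[parent_id])
    return expanded
```

Small children give precise embeddings for retrieval, while the expanded parents give the language model richer context at generation time.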

Second, to let data engineers evaluate retrieval effectiveness empirically, Datavolo can dynamically populate different namespaces within a Pinecone index, each corresponding to a combination of chunking and parsing strategies, supporting retrieval evaluation and A/B testing.
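A minimal sketch of that evaluation loop: one namespace per combination of parsing and chunking strategy, and a simple hit-rate@k metric computed per namespace over a labeled question set. The strategy names, the `retrieve` callable (which would wrap a namespaced vector query), and the metric are assumptions for illustration; ranking metrics such as MRR or nDCG are common alternatives.

```python
from itertools import product
from typing import Callable

# Hypothetical strategy names; each (parser, chunker) pair gets its own namespace.
PARSERS = ["layout_v1", "layout_v2"]
CHUNKERS = ["fixed_500", "structural", "hybrid"]


def namespaces(parsers: list[str], chunkers: list[str]) -> list[str]:
    return [f"{parser}__{chunker}" for parser, chunker in product(parsers, chunkers)]


def hit_rate_at_k(
    eval_set: list[tuple[str, str]],
    retrieve: Callable[[str, str, int], list[str]],
    namespace: str,
    k: int = 5,
) -> float:
    """Fraction of (question, expected_source) pairs whose source appears in the top-k results."""
    hits = sum(expected in retrieve(question, namespace, k)[:k] for question, expected in eval_set)
    return hits / len(eval_set)
```

Ground truth is expressed at the source level (for example a document or section id) rather than the chunk level, since chunk ids differ across chunking strategies.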

Finally, because Datavolo provides a continuous and automated platform for data ingestion, it is important that changing data is captured appropriately as it is sourced and fed to retrieval systems. As noted in the discussion of the List/Fetch pattern above, tracking strategies recognize change as new events and versions arrive at the source. Datavolo uses Pinecone’s upsert pattern, along with selective purging of previously written records, to ensure that changes are persisted correctly without triggering expensive re-indexing operations.
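The bookkeeping behind “upsert changed records, purge stale ones” can be sketched as a pure function that compares content hashes of a new document version’s chunks with those recorded for the previous version. The `doc_id#index` id scheme and the stored hash map are assumptions; with the Pinecone Python client, the results would feed `index.upsert(...)` for changed ids and `index.delete(ids=..., namespace=...)` for stale ones.

```python
import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(
    doc_id: str, new_chunks: list[str], previous: dict[str, str]
) -> tuple[list[str], list[str], dict[str, str]]:
    """Compare a document's new chunks with the hashes recorded for its previous version.

    Returns (ids to upsert, stale ids to delete, hashes to record for the new version).
    """
    current = {f"{doc_id}#{i}": content_hash(text) for i, text in enumerate(new_chunks)}
    to_upsert = [cid for cid, digest in current.items() if previous.get(cid) != digest]
    to_delete = [cid for cid in previous if cid not in current]
    return to_upsert, to_delete, current
```

With position-based ids, inserting a chunk near the start of a document shifts every later id and causes them to be re-upserted; content-addressed ids avoid that at the cost of losing a stable ordering key.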

Conclusion

Datavolo helps make advanced document processing secure, continuous, and easy. In addition to providing enterprise-grade production data pipelines, Datavolo allows for rapid iteration and prototyping of different processing strategies, since effective document processing is an iterative process.