Understanding RAG

Datavolo’s role in an end-to-end Generative AI system is the continuous & automated change data capture, pre-processing, and delivery of unstructured data. These systems include Retrieval-Augmented Generation (RAG) applications. We don’t build Gen AI apps; we make sure your unstructured data is available to these systems.

We fuel RAG applications and our goal is to 10x the output of data engineers building data pipelines to power them. This page provides a conceptual overview of such a pipeline, but first let’s make sure we agree on the basic concepts of a RAG app itself.

LLM Concepts

Before we go any further, let’s make sure we know what we are augmenting in the first place. This type of Artificial Intelligence (AI) app is meant to provide additional context to a Large Language Model (LLM). LLMs provide human-readable responses to the prompts presented to them. Most people think of ChatGPT, based on their personal interactions and/or the media coverage it has received over the last couple of years.

There are many other LLMs out there, but for this discussion we will think of them as a concept and not differentiate among specific implementations. One of the issues with LLMs is that they are very computationally intensive to create (i.e., to train, in the AI vernacular). We won’t get into the math and computer science details of training a model, but two terms that help explain it will also be useful when we explore the data pipeline aspects of this post.

- Chunks – LLMs are trained on a massive amount of textual data. Much like how we as humans tackle problems by breaking them down into smaller pieces, the training process creates chunks of this input data. For simple understanding, think of how a book is broken down into chapters, which are then broken down into paragraphs.

- Embeddings – Computers don’t really speak languages such as English and Spanish, but they can create mathematical representations of those chunks. They can also determine appropriate associations between these “embeddings” that are calculated. I think our minds do something similar, finding embeddings of memories that are closely related to the topic we are thinking of. It is no wonder they call this a neural network.
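To make the two terms concrete, here is a minimal sketch in Python. It assumes paragraph-level chunks and uses simple word counts as a toy stand-in for embeddings; real embedding models produce dense numeric vectors, and the function names here (`chunk_paragraphs`, `toy_embed`, `cosine_similarity`) are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_paragraphs(text: str) -> list[str]:
    """Split text into paragraph chunks on blank lines."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def toy_embed(chunk: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", chunk.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


document = "The brake pads were replaced.\n\nThe invoice total was 240 dollars."
chunks = chunk_paragraphs(document)
vectors = [toy_embed(chunk) for chunk in chunks]

# Compare a question against every chunk; the higher score is the closer chunk.
query = toy_embed("Which brake parts were replaced?")
scores = [cosine_similarity(query, vector) for vector in vectors]
```

In this example the first chunk scores higher than the second because it shares words with the question, which is the "closely related" association described above in miniature.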

Another issue with LLMs is that they can only be trained on publicly available information. Large-scale LLM providers may also have additional information via partnerships. Most organizations have proprietary information that would be beneficial to include in the creation of a new LLM, but the cost of creating one is usually prohibitive. Fortunately, LLMs allow a request to include additional information in the prompt, which lets the model produce a more relevant response.

As these technologies evolve and enterprises begin building, or fine-tuning, their own models, there will still be deltas of information that has changed since the LLM was last trained. RAG exists for these scenarios, too. Datavolo’s continuous & automated change data capture, pre-processing, and delivery of unstructured data is here to continue to fuel RAG applications today and in the future.

RAG Apps

RAG apps do just that. They retrieve additional context to include with the original prompt so the LLM can respond more appropriately – and they do so in a less costly way than training a new model.

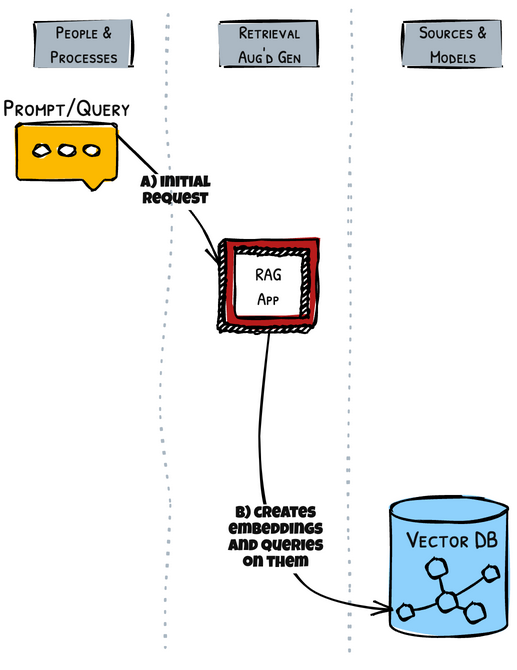

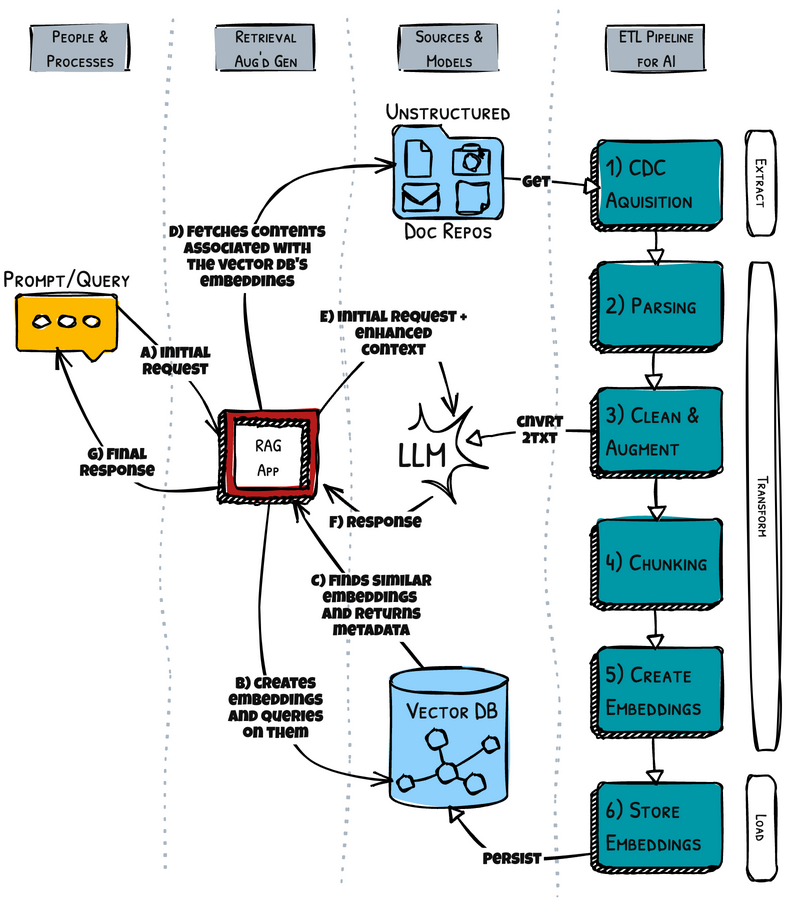

Query Vector Database

The initial processing steps of the RAG app are shown below. A) is the receipt of an initial prompt or query from the person or process needing a response. B) shows that the RAG app needs to create embeddings of the request so it can search for closely related, previously stored embeddings of the organization’s proprietary information.

Those embeddings are stored in a special type of retrieval system called a vector database. It knows how to persist embeddings and, when presented with new ones, return the closely related embeddings we are looking for. We will discuss the creation of those embeddings in the data pipeline section of this post.
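The persist-then-search behavior can be sketched with a tiny in-memory store. This is an illustration only: `InMemoryVectorStore` is a hypothetical class, not the API of any real vector database, and it assumes cosine similarity as the measure of "closely related".

```python
import math


def _cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database."""

    def __init__(self) -> None:
        self._rows: list[tuple[list[float], dict]] = []

    def add(self, vector: list[float], metadata: dict) -> None:
        """Persist an embedding together with metadata about its chunk."""
        self._rows.append((list(vector), dict(metadata)))

    def query(self, vector: list[float], top_k: int = 1) -> list[tuple[float, dict]]:
        """Return the top_k stored entries most similar to the given vector."""
        scored = [(_cosine(vector, stored), meta) for stored, meta in self._rows]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


store = InMemoryVectorStore()
store.add([1.0, 0.0, 0.0], {"doc": "service_report.pdf", "chunk": 0})
store.add([0.0, 1.0, 0.0], {"doc": "invoice.pdf", "chunk": 3})

# The query vector points mostly in the direction of the first stored vector.
hits = store.query([0.9, 0.1, 0.0], top_k=1)
```

Production vector databases typically use approximate nearest-neighbor indexes rather than the full scan shown here, but the contract is the same: embeddings go in, and the closest matches come out.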

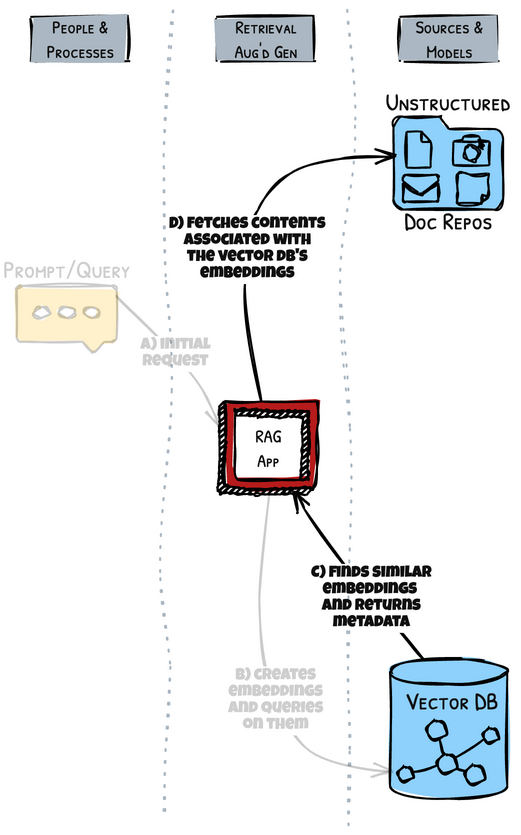

Retrieve actual information

The vector database can store the original chunk that was used to create an embedding but, as C) shows, it often returns only metadata related to the embedding. This metadata can include details about the origin of the embedding’s chunk. D) shows the RAG app retrieving the textual information from the unstructured proprietary documents that were chunked & embedded. Those steps will be explained in the data pipeline overview.
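Steps C) and D) amount to a lookup from metadata back to text. The sketch below assumes each hit carries a document name and a chunk index, and that chunk text lives in a separate store; the `CHUNK_TEXT` dictionary and `resolve_chunks` function are hypothetical placeholders for whatever document store is actually used.

```python
# Hypothetical chunk store keyed by (document name, chunk index).
CHUNK_TEXT = {
    ("service_report.pdf", 0): "The brake pads were replaced.",
    ("service_report.pdf", 1): "The rotors were inspected.",
}


def resolve_chunks(hits: list[dict], chunk_text: dict) -> list[str]:
    """Turn metadata returned by the vector database into the original text."""
    resolved = []
    for hit in hits:
        key = (hit["doc"], hit["chunk"])
        if key in chunk_text:  # skip metadata whose source text is missing
            resolved.append(chunk_text[key])
    return resolved


hits = [
    {"doc": "service_report.pdf", "chunk": 1},
    {"doc": "missing.pdf", "chunk": 0},
]
context = resolve_chunks(hits, CHUNK_TEXT)
```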

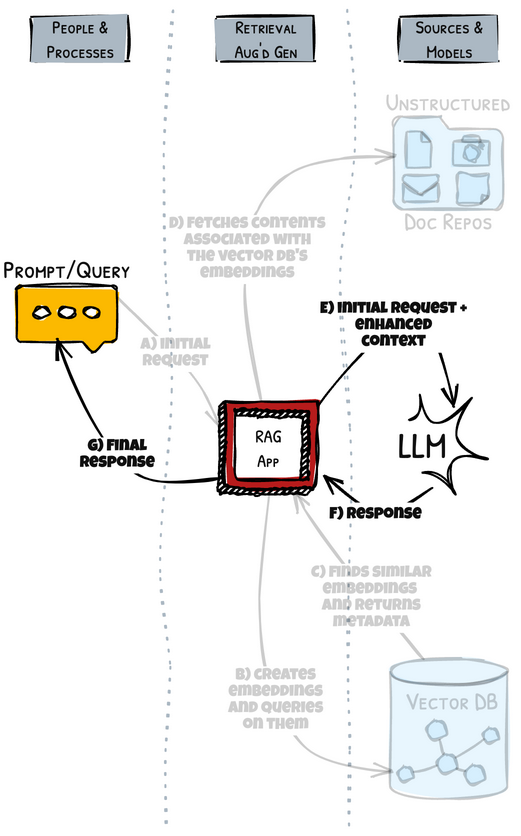

Augment the LLM Request

Now we E) send a better prompt to the LLM that includes the enhanced context along with the initial request. After F) a response is returned, the RAG app can G) pass on the answer to the person or process that initially made the request.
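Step E) is largely string assembly. As a minimal sketch, assuming the retrieved chunks are already available as text, a hypothetical `build_augmented_prompt` function might look like this. The instruction wording is illustrative, and the call to the LLM itself is left out.

```python
def build_augmented_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine retrieved context with the original request into one prompt."""
    context = "\n".join(
        f"[{number}] {chunk}" for number, chunk in enumerate(context_chunks, start=1)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_augmented_prompt(
    "Which brake parts were replaced?",
    ["The brake pads were replaced.", "The rotors were inspected."],
)
```

Numbering the chunks is a design choice that makes it easier to ask the model to cite which piece of context it relied on.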

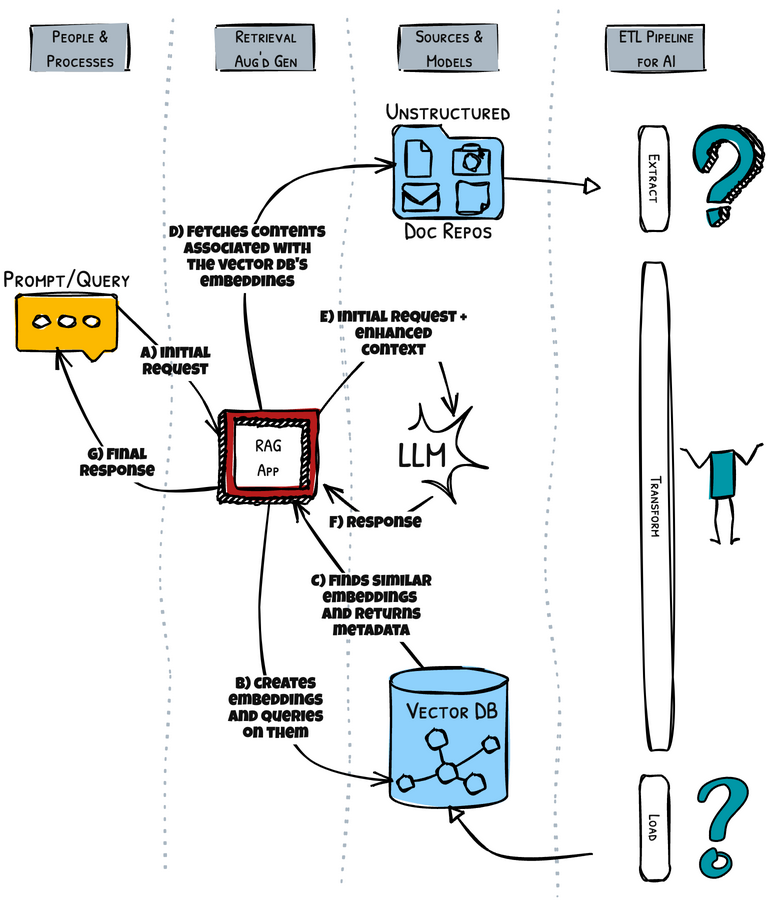

The Data Pipeline

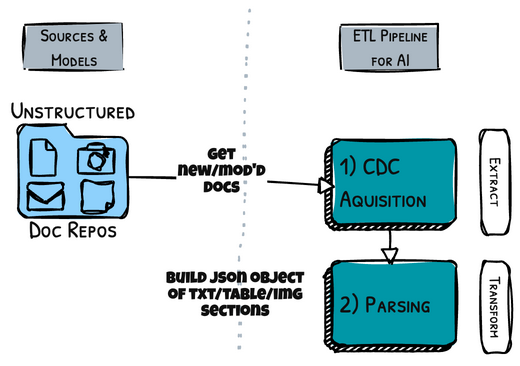

Now that we are familiar with LLMs and how RAG applications conceptually work, let’s home in on the Extract – Transform – Load (ETL) data pipeline that is needed to make it all work. As the next diagram shows, we need to tackle a whole new swim lane.

Datavolo’s focus area is here in the data pipelining efforts for AI apps. We help you ensure your unique and valuable enterprise data is factored into the AI systems.

Extract

In 1) the pipeline acquires unstructured data. This extract step needs to account for new documents since the last time it ran AND anything that was modified since that same time.
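The "new AND modified" requirement can be sketched as a comparison against the previous run. This example assumes the pipeline remembers which paths it has already seen and when it last ran; `SourceDocument` and `select_for_extraction` are hypothetical names, and a real source system may expose change events instead of timestamps.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class SourceDocument:
    path: str
    modified_at: datetime


def select_for_extraction(
    documents: list[SourceDocument],
    known_paths: set[str],
    last_run: datetime,
) -> tuple[list[SourceDocument], list[SourceDocument]]:
    """Return (new, modified) documents relative to the previous run."""
    new, modified = [], []
    for doc in documents:
        if doc.path not in known_paths:
            new.append(doc)  # never seen before
        elif doc.modified_at > last_run:
            modified.append(doc)  # seen before, changed since the last run
    return new, modified


last_run = datetime(2024, 1, 10, tzinfo=timezone.utc)
documents = [
    SourceDocument("report_a.pdf", datetime(2024, 1, 5, tzinfo=timezone.utc)),
    SourceDocument("report_b.pdf", datetime(2024, 1, 12, tzinfo=timezone.utc)),
    SourceDocument("report_c.pdf", datetime(2024, 1, 11, tzinfo=timezone.utc)),
]
new_docs, modified_docs = select_for_extraction(
    documents, known_paths={"report_a.pdf", "report_b.pdf"}, last_run=last_run
)
```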

After the docs have been retrieved, 2) starts the transform steps by creating a JSON representation of the unstructured document. It uses visual cues such as headings and paragraph breaks, and it captures other content such as images and information in tabular format.
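As a rough illustration of what such a JSON representation could look like, the sketch below assumes plain-text input in which headings are marked with leading `#` characters and blocks are separated by blank lines. Real documents (PDFs, slides, scans) need dedicated parsers; the `to_structured` function and the element schema are hypothetical.

```python
import json


def to_structured(text: str) -> dict:
    """Convert blank-line-separated text into a list of typed elements."""
    elements = []
    for block in text.split("\n\n"):
        block = block.strip()
        if not block:
            continue
        if block.startswith("#"):
            # The number of leading '#' characters gives the heading level.
            level = len(block) - len(block.lstrip("#"))
            elements.append(
                {"type": "heading", "level": level, "text": block.lstrip("#").strip()}
            )
        else:
            elements.append({"type": "paragraph", "text": " ".join(block.split())})
    return {"elements": elements}


raw = "# Service Report\n\nBrake pads replaced.\n\n## Parts\n\nTwo front pads."
structured = to_structured(raw)
as_json = json.dumps(structured, indent=2)
```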

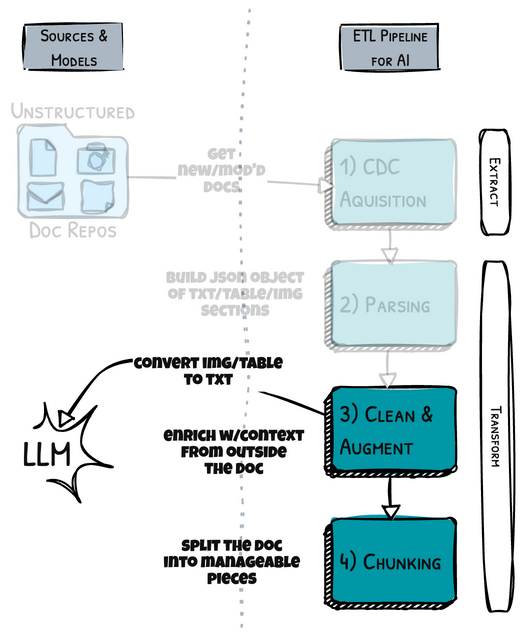

Transform

For the images and table-based data, 3) shows that LLMs and other AI tools can be used to convert them into text. This is also an opportunity to add additional data and metadata that could be known to be related to the document being processed. For example, if the document was an automotive service report, the Vehicle Identification Number (VIN) could be used to retrieve additional details such as make, model, and year of the automobile.
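The VIN example can be sketched as a metadata merge. The lookup table below is a hypothetical placeholder with made-up values; in practice the details would come from a reference system or service, and the `enrich` function name is an assumption.

```python
# Hypothetical reference data; the VIN and vehicle details are placeholders.
VEHICLE_LOOKUP = {
    "EXAMPLEVIN0000001": {"make": "ExampleMake", "model": "ExampleModel", "year": 2020},
}


def enrich(document: dict, lookup: dict) -> dict:
    """Return a copy of the document with vehicle details merged into metadata."""
    details = lookup.get(document.get("vin"), {})
    return {**document, "metadata": {**document.get("metadata", {}), **details}}


report = {"vin": "EXAMPLEVIN0000001", "text": "Brake pads replaced."}
enriched = enrich(report, VEHICLE_LOOKUP)
```

Returning a copy rather than mutating the input keeps the original document available if a later step needs to re-run the enrichment with different reference data.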

Another important transform task is 4) chunking, as described at the beginning of this post. Breaking the complete document into more manageable elements (and maintaining their relationships) will make the results of the pipeline more meaningful when the data is retrieved to augment the initial request to the LLM.
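One way to keep relationships while chunking is to record, for each chunk, the section it came from and the chunk that preceded it. The sketch below assumes the document has already been parsed into heading and paragraph elements; the element shape and the `chunk_elements` function are hypothetical.

```python
def chunk_elements(elements: list[dict]) -> list[dict]:
    """Create one chunk per paragraph, keeping its section and predecessor."""
    chunks = []
    current_section = None
    for element in elements:
        if element["type"] == "heading":
            current_section = element["text"]  # applies to following paragraphs
            continue
        chunk_id = len(chunks)
        chunks.append(
            {
                "id": chunk_id,
                "section": current_section,
                "previous": chunk_id - 1 if chunk_id > 0 else None,
                "text": element["text"],
            }
        )
    return chunks


elements = [
    {"type": "heading", "text": "Service Report"},
    {"type": "paragraph", "text": "Brake pads replaced."},
    {"type": "heading", "text": "Parts"},
    {"type": "paragraph", "text": "Two front pads."},
]
chunks = chunk_elements(elements)
```

With the section and predecessor recorded, a RAG app that retrieves one chunk can also pull in its neighbors or its heading to give the LLM fuller context.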

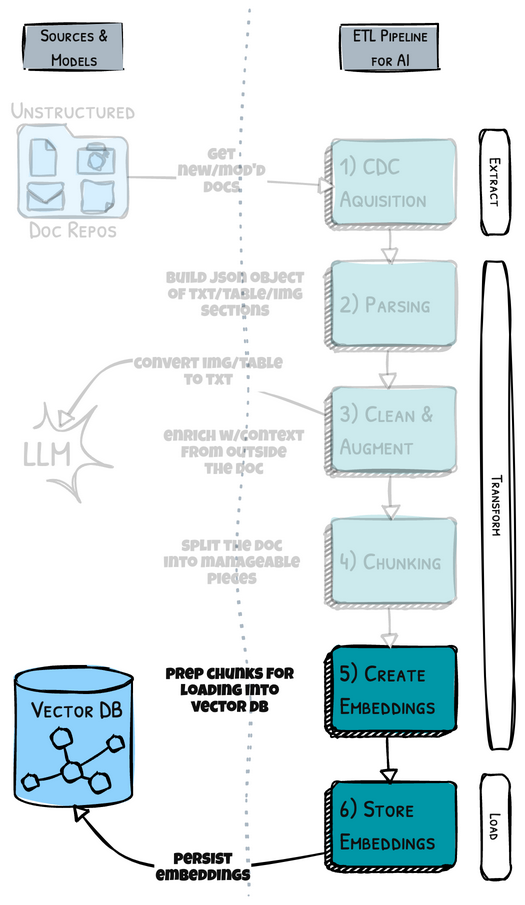

Load

Steps 5) and 6) focus on the embedding creation process also discussed at the beginning of this post. Again, this is creating mathematical representations of the chunks that can then be loaded into a vector database.

Each step in this conceptual ETL pipeline should be extremely flexible. This includes swapping out the tools and AI models used (e.g., for converting images to text), allowing more than a single approach to be employed (e.g., using multiple chunking strategies), and keeping the pipeline easy to maintain (e.g., storing embeddings in a different vector database).
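This kind of flexibility can be sketched by treating each step as a swappable function. The example below is an assumption-laden illustration, not how Datavolo implements it: `Pipeline`, `by_paragraph`, `by_sentence`, and `length_embedder` are hypothetical, the embedder is a toy, and a plain list stands in for the vector database.

```python
import re
from dataclasses import dataclass
from typing import Callable

Chunker = Callable[[str], list[str]]
Embedder = Callable[[str], list[float]]


def by_paragraph(text: str) -> list[str]:
    """Chunking strategy: split on blank lines."""
    return [p.strip() for p in text.split("\n\n") if p.strip()]


def by_sentence(text: str) -> list[str]:
    """Chunking strategy: split after sentence-ending punctuation."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def length_embedder(chunk: str) -> list[float]:
    """Toy embedder: a one-dimensional vector holding the chunk length."""
    return [float(len(chunk))]


@dataclass
class Pipeline:
    chunkers: list[Chunker]  # more than one strategy can run side by side
    embedder: Embedder       # swappable model
    sink: list               # stand-in for a vector database

    def run(self, text: str) -> int:
        """Chunk, embed, and load; return the number of entries in the sink."""
        for chunker in self.chunkers:
            for chunk in chunker(text):
                self.sink.append(
                    {
                        "strategy": chunker.__name__,
                        "text": chunk,
                        "vector": self.embedder(chunk),
                    }
                )
        return len(self.sink)


pipeline = Pipeline(chunkers=[by_paragraph, by_sentence], embedder=length_embedder, sink=[])
loaded = pipeline.run("First point. Second point.\n\nThird point.")
```

Changing the chunking strategy, the embedding model, or the destination then means passing a different function or sink rather than rewriting the pipeline.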

Put it all Together

Traditional structured data pipelines are very different from AI data pipelines using unstructured documents. Data engineers will have to adapt to these conceptual ETL pipeline strategies, and they deserve a framework that offers extensive functionality and flexibility and enables productivity.

Datavolo provides this functionality, flexibility, and productivity, coupled with a low-code approach to pipeline construction. Data engineers can visually add processor boxes like those shown above, connect them via drag and drop, and then configure the processors via properties and micro-coding to handle conditional expressions and attribute setting.

Datavolo allows custom-built processors to be programmed in Python and Java for those rare times when your pipeline’s logic just can’t be completed without them. Data lineage, real-time metrics, auto-scaling, and more features make Datavolo an even more powerful solution.

Are you ready to be a 10x data engineer for AI? Let us know how we can help!